How to Conduct a Cybersecurity Assessment

Get a clear view of security performance so you can decide where to invest, demonstrate compliance, and keep operations running. This guide walks you through each step — from scoping to ongoing measurement — and helps tie security work directly to business outcomes.

What is a cybersecurity assessment?

A cybersecurity assessment systematically reviews security controls, related processes, and the results those controls produce. It finds gaps, assigns a maturity level, and creates a list of actions that link directly to enterprise risk. By connecting technical findings with business objectives, the assessment turns security data into usable input for governance and budgeting.

Why perform a cybersecurity assessment?

An assessment shows where security effort reduces business risk most efficiently. It provides evidence of compliance with contracts and regulations, clarifies supplier exposure, and gives you a solid basis for prioritizing future spending. Executives get a concise view of risk‑reduction opportunities, which supports budget approvals and board reporting.

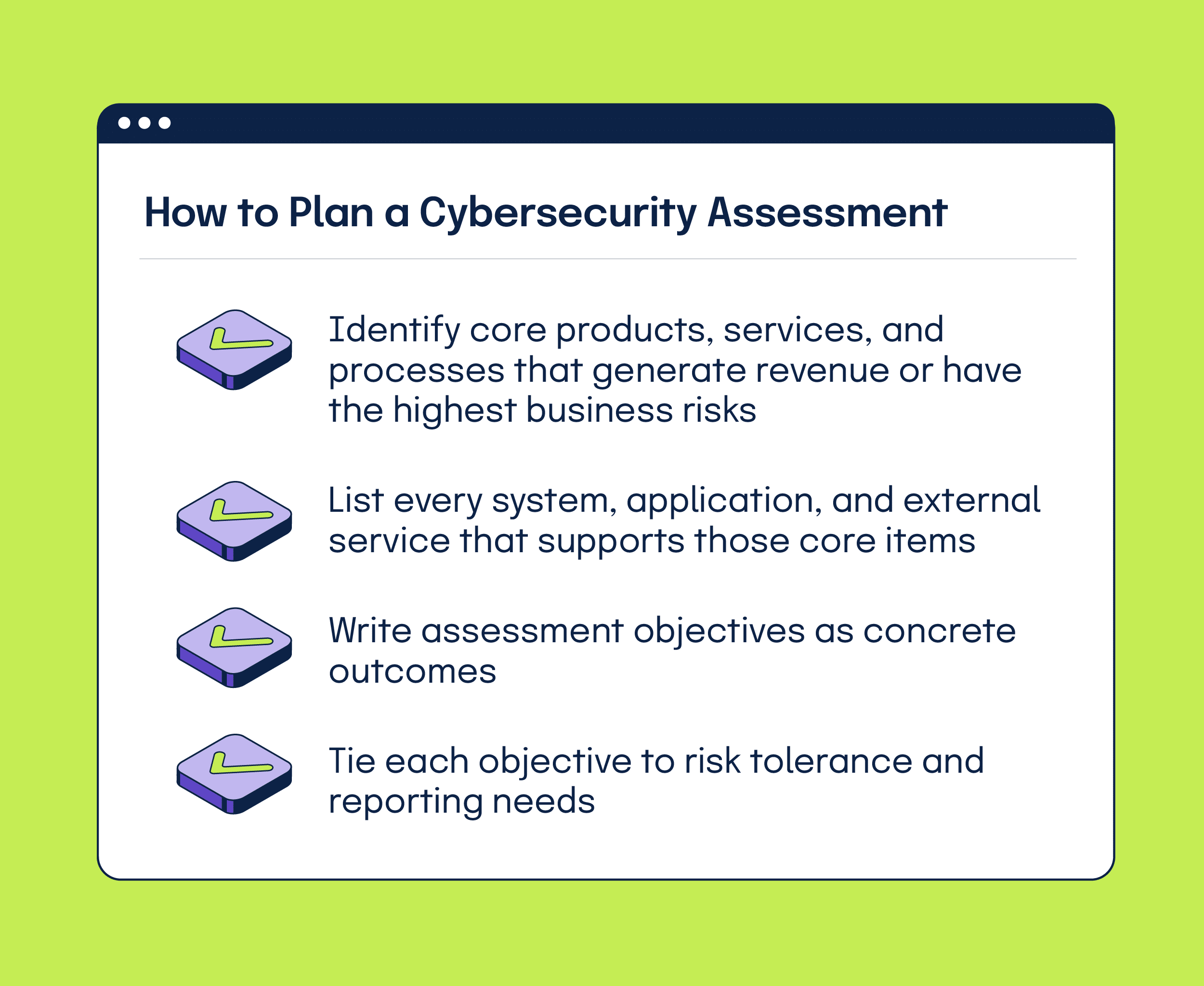

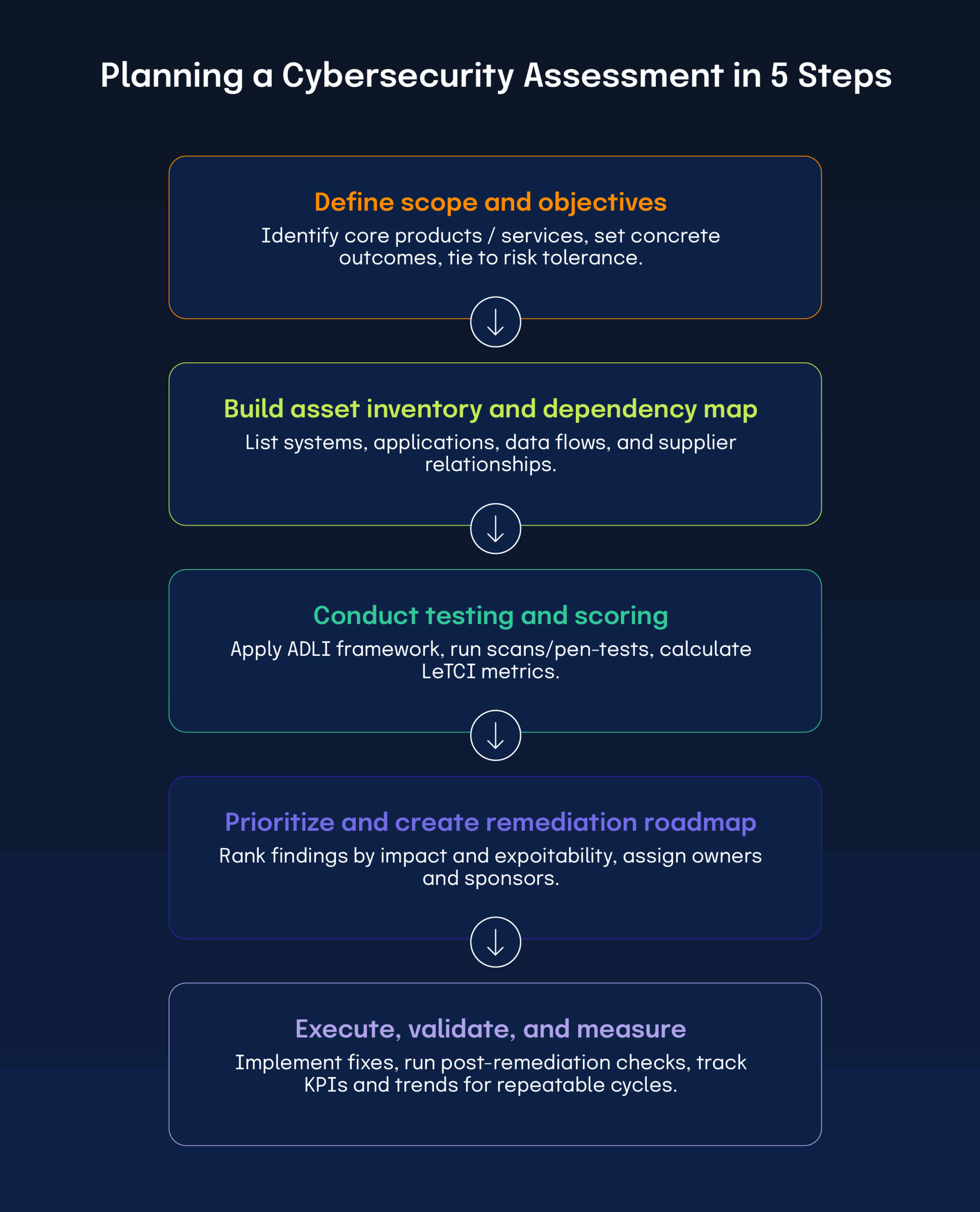

How do you plan the assessment?

- Identify core products, services, and processes that generate revenue or have the highest business risks

- List every system, application, and external service that supports those core items

- Write assessment objectives as concrete outcomes, like validated control effectiveness, a prioritized risk map, or an executable remediation plan

- Tie each objective to risk tolerance and reporting needs so the final report meets governance requirements without extra work

Plan to gather additional stakeholder input on regulatory obligations, contractual clauses, and expected reporting formats. Mapping these early prevents later re‑work when preparing findings from the assessment.

How do you organize the assessment team?

A cross‑functional team includes diverse perspectives. Include:

If you’re using external consultants or similar resources to conduct the assessment, assign clear handoff points and document the knowledge transfer so your internal staff retain capabilities after the external experts finish their work.

Which frameworks should guide testing?

Choose a recognized framework that covers the six core functions of NIST CSF 2.0: Govern, Identify, Protect, Detect, Respond, and Recover. Map each assessment item to those functions and adopt a process model that captures Approach, Deployment, Learning, and Integration (ADLI). Combine automated scans with manual validation and configuration reviews so that test results reflect real‑world behavior.
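As a rough illustration of mapping assessment items to the six functions, the sketch below groups a handful of items by function and flags any function that has no items. The item IDs, titles, and field names are hypothetical and only there to show the shape of the mapping.

```python
from collections import defaultdict

# The six NIST CSF functions named above.
CSF_FUNCTIONS = ["Govern", "Identify", "Protect", "Detect", "Respond", "Recover"]

# Hypothetical assessment items, each tagged with the function it tests.
assessment_items = [
    {"id": "A-01", "title": "Risk appetite statement reviewed", "function": "Govern"},
    {"id": "A-02", "title": "Asset register reconciled", "function": "Identify"},
    {"id": "A-03", "title": "MFA enforced on admin accounts", "function": "Protect"},
    {"id": "A-04", "title": "Log alerting rules validated", "function": "Detect"},
    {"id": "A-05", "title": "Incident runbook exercised", "function": "Respond"},
]

def coverage_by_function(items):
    """Group assessment item IDs under each CSF function."""
    grouped = defaultdict(list)
    for item in items:
        if item["function"] not in CSF_FUNCTIONS:
            raise ValueError(f"Unknown CSF function: {item['function']}")
        grouped[item["function"]].append(item["id"])
    # Return every function, including those with no items, in CSF order.
    return {fn: grouped.get(fn, []) for fn in CSF_FUNCTIONS}

coverage = coverage_by_function(assessment_items)
uncovered = [fn for fn, ids in coverage.items() if not ids]
print("Functions with no assessment items:", uncovered)
```

In this sample data, Recover has no items, which is the kind of gap worth catching before testing starts.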

Cybersecurity assessment vs. traditional audit

| Aspect | Cybersecurity assessment | Traditional audit |
|---|---|---|
| Scope | Continuous, business‑focused view of security posture | Static, compliance‑focused snapshot |
| Frequency | Ongoing or quarterly cycles | Typically annual or ad‑hoc |
| Methodology | Repeatable scoring, risk‑based testing, automated scans, and manual validation | Checklist‑driven, control‑centric questionnaires |
| Outcome | Prioritized remediation roadmap with clear owners, sponsors, timelines, and measurable KPIs | Pass/fail compliance report, often lacking actionable remediation detail |
| Business value | Directly ties findings to ROI, budget decisions, and strategic risk reduction | Satisfies regulators but provides limited insight for strategic planning |
| Stakeholder alignment | Engages GRC, security, finance, and exec teams, which aligns with business objectives | Primarily involves compliance/legal teams, with less cross‑functional integration |
| Metrics tracked | Implementation coverage, effectiveness rates, MTTR, financial impact, trend analysis | Control existence/completeness, audit scorecards, exception counts |
| Adaptability | Quickly incorporates new threats, regulatory changes, and technology shifts | Rigid schedule, and updates require a new audit cycle |
| Tooling | Integrated dashboards, automated data collection, and AI‑assisted risk modeling | Manual evidence collection, spreadsheet‑based tracking |

How do you build an asset inventory and map dependencies?

Create a comprehensive list of devices, servers, applications, operational technology, data stores, and cloud services. Prioritize each item by its contribution to core business outcomes. Then, map information flows and supplier relationships to expose single points of failure and to develop realistic threat scenarios.
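One simple way to surface single points of failure from a dependency map is sketched below. It assumes each core service lists its requirements as groups of interchangeable components, so a group with only one member has no fallback. The service and component names are hypothetical.

```python
# Hypothetical dependency map: each core service lists requirement groups,
# and each group lists the interchangeable components that can satisfy it.
dependencies = {
    "online-store": [["payments-api"], ["db-primary", "db-replica"]],
    "invoicing": [["payments-api"], ["erp-cloud"]],
}

def single_points_of_failure(deps):
    """Return {component: sorted services} for components with no alternative."""
    spofs = {}
    for service, groups in deps.items():
        for group in groups:
            if len(group) == 1:  # only one component can meet this requirement
                spofs.setdefault(group[0], set()).add(service)
    return {component: sorted(services) for component, services in spofs.items()}

spofs = single_points_of_failure(dependencies)
# Components that more services rely on are printed first.
for component, services in sorted(spofs.items(), key=lambda kv: -len(kv[1])):
    print(component, "->", services)
```

A real dependency map would also need to follow transitive dependencies (for example, what `payments-api` itself relies on), which this sketch leaves out.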

Report asset inventory metrics that matter for governance, such as asset registration coverage, the share of critical systems on approved baselines, and authentication coverage for sensitive servers. Include runbooks, contact lists, and process documents so recovery depends on people and information as well as technology.
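The inventory metrics mentioned above could be computed along these lines. This is a minimal sketch: the record fields are illustrative, and treating "authentication coverage" as a `strong_auth` flag on sensitive assets is an assumption, since the exact definition depends on your policy.

```python
# Hypothetical inventory records; the field names are illustrative assumptions.
assets = [
    {"name": "web-01", "registered": True, "critical": True,
     "on_baseline": True, "sensitive": False, "strong_auth": False},
    {"name": "db-01", "registered": True, "critical": True,
     "on_baseline": False, "sensitive": True, "strong_auth": True},
    {"name": "hr-files", "registered": True, "critical": False,
     "on_baseline": True, "sensitive": True, "strong_auth": False},
    {"name": "lab-vm", "registered": False, "critical": False,
     "on_baseline": False, "sensitive": False, "strong_auth": False},
]

def pct(part, whole):
    """Percentage rounded to one decimal, or None when there is nothing to measure."""
    return round(100 * part / whole, 1) if whole else None

def inventory_metrics(records):
    """Compute the three governance metrics over discovered assets."""
    critical = [a for a in records if a["critical"]]
    sensitive = [a for a in records if a["sensitive"]]
    return {
        "registration_coverage_pct": pct(sum(a["registered"] for a in records), len(records)),
        "critical_on_baseline_pct": pct(sum(a["on_baseline"] for a in critical), len(critical)),
        "sensitive_auth_coverage_pct": pct(sum(a["strong_auth"] for a in sensitive), len(sensitive)),
    }

metrics = inventory_metrics(assets)
print(metrics)
```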

How do you identify threats and vulnerabilities?

Use the asset and data flow maps to drive threat modeling that reflects actual business dependencies. Identify likely threat actors, plausible attack paths, and regulatory pressures that shape exposure. Pair technical scans with manual validation to confirm exploitable weaknesses. Verify identity and access controls against the organization’s risk strategy.

Score each scenario by impact and exploitability, then rank them so the most business‑relevant risks rise to the top of the remediation list.
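A minimal sketch of that scoring step follows. It assumes 1-to-5 scales for both factors and a simple product as the score. The article does not prescribe a formula, so treat the scales, the multiplication, and the sample scenarios as illustrative choices.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Scenario:
    name: str
    impact: int          # 1 (minor) to 5 (severe), assumed scale
    exploitability: int  # 1 (hard) to 5 (trivial), assumed scale

    @property
    def score(self) -> int:
        # Assumed scoring rule: impact multiplied by exploitability.
        return self.impact * self.exploitability

# Hypothetical scenarios for illustration only.
scenarios = [
    Scenario("Ransomware on order system", impact=5, exploitability=3),
    Scenario("Phished finance credentials", impact=4, exploitability=4),
    Scenario("Defaced marketing site", impact=2, exploitability=5),
]

# Highest score first, so the most business-relevant risks lead the list.
ranked = sorted(scenarios, key=lambda s: s.score, reverse=True)
for s in ranked:
    print(f"{s.score:>2}  {s.name}")
```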

How do you evaluate cybersecurity processes?

Rate each security process using the ADLI model. For each domain (leadership, strategy, customers, measurement, workforce, and operations), document the method in use, verify that it is deployed consistently, and keep the supporting documentation current. Look for feedback loops and confirm integration with business goals.

Applying ADLI

1. Approach: Describe the documented method and its intended outcome

2. Deployment: Verify consistent application across units and partners

3. Learning: Check for feedback loops, experiments, and knowledge sharing

4. Integration: Confirm alignment with product and operational objectives

For example, a repeatable patching process that runs across the enterprise, is reviewed after incidents, and aligns with product release cycles will score higher than an ad‑hoc approach with minimal documentation.
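To make the patching comparison concrete, the sketch below rates each ADLI factor on an assumed 0-to-4 scale and averages the four ratings. The scale, the equal weighting, and the sample ratings are assumptions for illustration. The article does not define a numeric rubric.

```python
from statistics import mean

ADLI_FACTORS = ("approach", "deployment", "learning", "integration")

def adli_score(ratings):
    """Average the four factor ratings (assumed scale: 0 = absent, 4 = mature)."""
    missing = [f for f in ADLI_FACTORS if f not in ratings]
    if missing:
        raise ValueError(f"Missing ADLI factors: {missing}")
    for factor in ADLI_FACTORS:
        if not 0 <= ratings[factor] <= 4:
            raise ValueError(f"{factor} rating must be between 0 and 4")
    return mean(ratings[f] for f in ADLI_FACTORS)

# Hypothetical assessor ratings for the two patching processes described above.
repeatable_patching = {"approach": 4, "deployment": 3, "learning": 3, "integration": 4}
ad_hoc_patching = {"approach": 1, "deployment": 1, "learning": 0, "integration": 1}

print("Repeatable:", adli_score(repeatable_patching))
print("Ad hoc:", adli_score(ad_hoc_patching))
```

Keeping the four factor ratings alongside the average helps, because a single number hides which factor is holding a process back.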

What technical methods should you use?

Layered testing validates whether documented controls actually reduce risk. Common techniques include:

Schedule live testing windows in advance to limit operational impact. Deliverables should include an executive summary, a technical appendix with confirmed findings, and a remediation task list that maps each finding to owners and target dates.

How do you measure maturity and performance?

Combine ADLI process scores with result‑oriented metrics that follow the LeTCI model: Levels, Trends, Comparisons, and Integration.

Organizations should apply consistent maturity levels for both process and results evaluations, using the same rubrics across cycles. Teams can automate the collection of repeatable measures where practical so that measurement supports continuous improvement.

Performance categories to track

1. Implementation: Coverage of deployed controls

2. Effectiveness: Indicators such as detection success rates

3. Efficiency: Speed and resource use, for example, mean time to detect (MTTD)

4. Impact: Linkage of cybersecurity outcomes to business results

5. Financial: Spend as a share of the IT budget and costs tied to security events

Automated dashboards can serve executives, while technical owners should receive more detailed reports. Store raw data so analyses can be reproduced and historical trends validated.
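As one example of a repeatable efficiency measure, mean time to detect can be derived from stored raw incident timestamps. The sketch assumes each record is a pair of ISO 8601 strings (when the event occurred, when it was detected). The sample data is made up.

```python
from datetime import datetime

# Hypothetical raw incident records: (occurred_at, detected_at).
incidents = [
    ("2024-01-05T08:00", "2024-01-05T12:00"),
    ("2024-02-10T09:00", "2024-02-10T11:00"),
    ("2024-03-15T14:00", "2024-03-15T23:00"),
]

def mean_time_to_detect_hours(records):
    """Average gap between occurrence and detection, in hours."""
    if not records:
        raise ValueError("No incidents to measure")
    total_seconds = sum(
        (datetime.fromisoformat(detected) - datetime.fromisoformat(occurred)).total_seconds()
        for occurred, detected in records
    )
    return total_seconds / len(records) / 3600

mttd = mean_time_to_detect_hours(incidents)
print(f"MTTD: {mttd:.1f} hours")
```

Because the calculation runs from raw timestamps, anyone can rerun it later to reproduce a reported figure or recheck a historical trend.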

How should scoring and prioritization work?

Pair each ADLI process score with its corresponding LeTCI result measure. Rank findings by business impact and exploitation likelihood so remediation focuses on the highest‑value opportunities. Create risk maps that plot impact against likelihood, then translate those visuals into workstreams that deliver the greatest risk reduction based on available resources.
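Turning a risk map into funded workstreams is ultimately a judgment call, but a simple greedy pass can give a starting shortlist. The sketch below picks workstreams by estimated risk reduction per unit cost until the budget runs out. The names, costs, and reduction estimates are hypothetical, and greedy selection is a heuristic that will not always find the best possible combination.

```python
# Hypothetical workstreams with cost and estimated risk reduction
# expressed in whatever consistent units the organization already uses.
workstreams = [
    {"name": "MFA rollout", "cost": 40, "risk_reduction": 30},
    {"name": "Patch automation", "cost": 60, "risk_reduction": 36},
    {"name": "Network segmentation", "cost": 100, "risk_reduction": 40},
    {"name": "Backup testing", "cost": 20, "risk_reduction": 10},
]

def select_workstreams(candidates, budget):
    """Greedy shortlist: best risk reduction per unit cost first, within budget."""
    ordered = sorted(
        candidates,
        key=lambda w: w["risk_reduction"] / w["cost"],
        reverse=True,
    )
    selected, remaining = [], budget
    for w in ordered:
        if w["cost"] <= remaining:
            selected.append(w["name"])
            remaining -= w["cost"]
    return selected, remaining

selected, remaining = select_workstreams(workstreams, budget=120)
print("Funded:", selected, "| unspent:", remaining)
```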

Simple visualizations help decision makers, and detailed reports guide implementers. Link each workstream to the organization’s budgeting cycle to secure funding alignment.

How do you convert findings into action plans?

Translate each prioritized finding into a measurable initiative. For every remediation item, define:

Cost‑benefit checks inform prioritization, while governance structures ensure sponsors own outcomes and teams execute tasks. Include monitoring artifacts and handoffs to preserve institutional knowledge. Tracking tools should display status, blockers, and metric deltas so sponsors can verify progress and reallocate resources as needed.

How do you execute remediation and validate results?

Allocate people, budget, and tracking tools to carry out remediation. Routine status reports should contain metric updates, milestone completion, and risk re‑evaluation. After remediation, run targeted tests and process checks to confirm control performance against stated goals. Maintain a short feedback loop between operations and the assessment team so your risk register reflects current reality. Adjust priorities when material changes occur in the threat environment or internal operations.

How do you measure and evaluate ongoing progress?

Use Key Performance Indicators (KPIs) and the LeTCI approach to track progress against remediation objectives. Trend data, financial metrics, and KPIs demonstrate program value and support future resource requests. Verify that improvements lower residual risk and that efficiency gains appear where expected.
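The quantitative parts of LeTCI can be summarized per KPI roughly as follows. This sketch assumes a metric where higher is better, takes the latest value as the level, uses the change since the first measurement as the trend, and compares against a benchmark. Integration is a qualitative judgment and is not computed here. The sample values are invented.

```python
def letci_summary(history, benchmark):
    """Summarize level, trend, and comparison for one KPI.

    history: measurements in chronological order (assumes higher is better).
    benchmark: the comparison value, such as a target or peer figure.
    """
    if len(history) < 2:
        raise ValueError("Need at least two measurements to show a trend")
    level = history[-1]
    return {
        "level": level,                        # current performance
        "trend": level - history[0],           # change over the period
        "gap_to_benchmark": level - benchmark, # negative means below benchmark
    }

# Hypothetical quarterly patch coverage (%) against a 95% target.
summary = letci_summary([78.0, 84.0, 91.0], benchmark=95.0)
print(summary)
```

In this example the KPI is improving but still below target, which is the kind of reading that supports a continued funding request.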

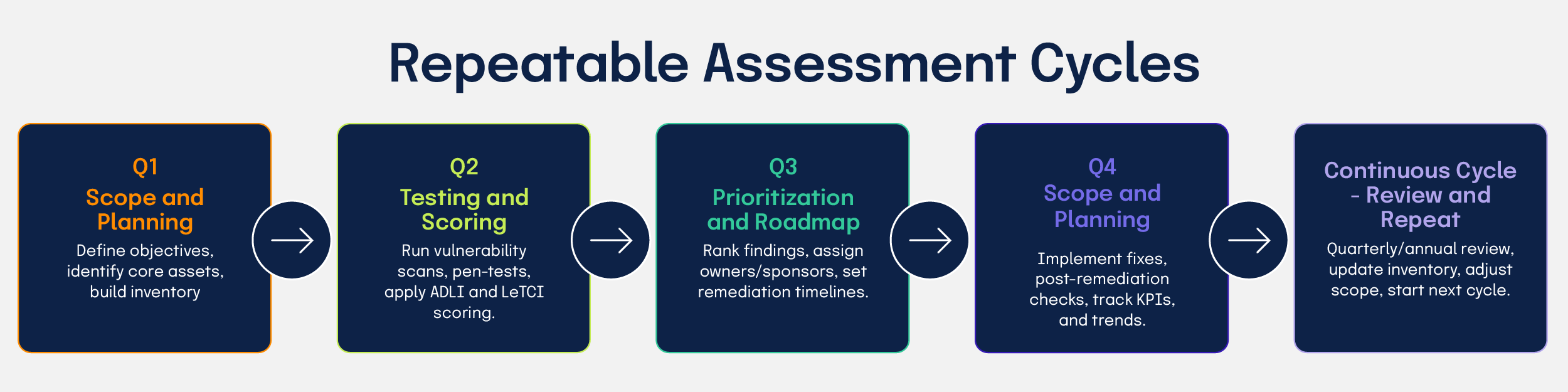

How do you establish repeatable assessment cycles?

Embed assessment activities into governance with a repeatable cadence: define scope, capture context, evaluate processes and results, prioritize actions, implement remediation, and measure progress. Balance depth and frequency so operational burden remains manageable while coverage stays relevant. Incorporate feedback to refine questions and rubrics as the organization learns and regulatory requirements evolve. Schedule executive reviews at regular intervals and set thresholds that trigger deeper assessments.
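Thresholds that trigger a deeper assessment can be expressed as simple rules over the metrics already being collected. In the sketch below, the metric names and limits are hypothetical. A "min" rule is breached when the value falls below the limit and a "max" rule when it rises above.

```python
# Hypothetical trigger rules: metric name -> (rule kind, limit).
THRESHOLDS = {
    "critical_patch_coverage_pct": ("min", 95.0),
    "mean_time_to_detect_hours": ("max", 8.0),
    "open_high_risk_findings": ("max", 5),
}

def breached_thresholds(metrics, thresholds):
    """Return the names of metrics that violate their threshold."""
    breaches = []
    for name, (kind, limit) in thresholds.items():
        value = metrics[name]
        if (kind == "min" and value < limit) or (kind == "max" and value > limit):
            breaches.append(name)
    return breaches

# Hypothetical values from the latest measurement cycle.
current = {
    "critical_patch_coverage_pct": 91.0,
    "mean_time_to_detect_hours": 6.5,
    "open_high_risk_findings": 7,
}

breaches = breached_thresholds(current, THRESHOLDS)
if breaches:
    print("Deeper assessment triggered by:", breaches)
```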

Practical notes on tools and implementation

Automation and crosswalks between frameworks help to reduce manual effort and create auditable evidence trails. Use a framework as your assessment lens and technical testing to validate control behavior. Produce crosswalk documentation that links requirements to test methods so the same control is not tested twice. Where automation is feasible, collect data automatically so human effort focuses on interpretation. Define knowledge‑transfer expectations when external assessors are engaged.

What deliverables should each assessment produce?

A complete assessment yields actionable outputs:

Store all findings and evidence in an accessible format so teams can reproduce results and validate past decisions.

A disciplined cybersecurity assessment connects scoping, asset mapping, process maturity evaluation, and technical validation into a prioritized remediation plan and measurable progress path. Merging ADLI process rubrics with LeTCI result metrics provides a unified view of maturity that supports risk‑based investment decisions and governance reporting.

Consistent measurement and accountable remediation activities enable the continuous reduction of enterprise risk.
