
A Guide to AI Risk Management Frameworks: What They Are and How to Use Them

Introduction

The capabilities of artificial intelligence are racing forward, and businesses are right to try to harness AI for greater efficiency, productivity, and growth. This also means, however, that the risks of misusing AI are growing just as fast. Businesses must develop effective, sustainable systems to manage those risks, and so far, that is no easy task. Consider a few findings from Hyperproof’s 2024 IT Risk and Compliance Benchmark Report:

80% say AI strategy is important for their teams’ operations.

59% are concerned or very concerned about the business risks that AI usage might bring.

Only 18% have aligned their compliance and risk activities.

In other words, while companies want to embrace the benefits of artificial intelligence, they aren’t sure how to do so wisely. They need guidance to identify the risks of AI usage and to implement effective policies, procedures, and other controls that keep those risks at appropriate levels. Without clear AI risk management frameworks, teams are forced to make ad hoc decisions about AI that are hard to explain to executives, regulators, or customers.

That guidance comes in the form of frameworks, and numerous frameworks now exist to help compliance officers and IT executives meet their risk management and regulatory compliance objectives. This guide explores what those frameworks are, how to choose the right one, and what benefits you can expect once you’ve implemented it well. By standardizing how you identify, analyze, and treat AI-related risks, AI risk frameworks give you a practical playbook for moving from experimentation to safe, production-ready systems.

How an AI risk management framework can help your organization

Frameworks serve as scaffolding on which you can build your business processes and steer those processes toward the goals you want to achieve. Each business will still implement its own system of processes and controls, depending on its unique risks and operations, but all businesses that follow a framework will (ideally) develop the same fundamental capabilities and insights, which they can then use to achieve operational, financial, and compliance objectives. A well-chosen AI risk management framework makes that scaffolding actionable, translating high-level principles into concrete steps your teams can follow when they design, test, and roll out AI systems.

More specifically, frameworks help your company to:

Of course, frameworks are not a new idea. For over 20 years, corporate finance teams have used frameworks (typically the COSO framework for effective internal control) to strengthen financial reporting and demonstrate compliance with the Sarbanes-Oxley Act. Globally, businesses have used ISO standards as frameworks to improve information security, quality management, and more.

Artificial intelligence is simply the next step. Already, numerous groups have developed AI-specific frameworks that any organization could use.

AI frameworks that exist today

As compliance officers, CISOs, and internal auditors work with technology teams to develop AI systems, several frameworks already exist to help your organization create safe, secure, and reliable systems.

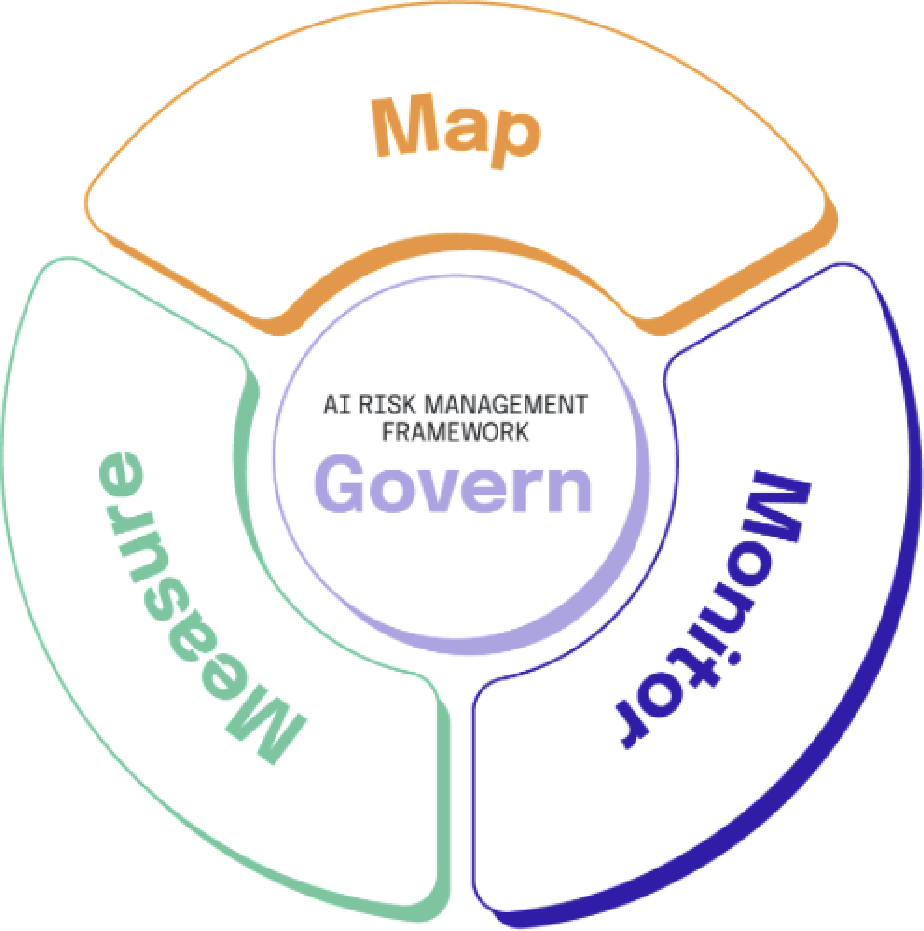

NIST AI Risk Management Framework

The U.S. National Institute of Standards and Technology (NIST) released its AI Risk Management Framework in January 2023. The framework is intended to be useful for organizations of any size and industry; it focuses on the core capabilities an organization should have to govern artificial intelligence rather than on specific internal controls it should implement. It is also intentionally technology-neutral, so it can be applied both to traditional machine-learning models and to newer generative AI systems.

At its core, the framework defines four functions: Govern, Map, Measure, and Manage.

The framework then defines several “categories” for each of the four functions, and several subcategories for each category. Each level down describes, in more specific terms, the capabilities you should have to manage your AI development appropriately.
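The function, category, and subcategory hierarchy maps naturally onto a nested data structure, which is roughly how many teams turn the framework into a working checklist. The Python sketch below is hypothetical: the four function names are NIST’s and the IDs follow the framework’s “GOVERN 1.1” numbering style, but the descriptions are placeholders rather than NIST’s wording, and the `owner` and `evidence` fields are our own assumption about what a team might track. It flags subcategories that still lack an owner or supporting evidence.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Subcategory:
    id: str                      # numbering style mirrors the AI RMF, e.g. "GOVERN 1.1"
    description: str             # placeholder text, not NIST's official wording
    owner: Optional[str] = None  # hypothetical: who is accountable for this outcome
    evidence: list[str] = field(default_factory=list)  # hypothetical: supporting artifacts


@dataclass
class Category:
    id: str
    subcategories: list[Subcategory]


@dataclass
class Function:
    name: str  # one of the four core functions
    categories: list[Category]


def open_items(functions: list[Function]) -> list[str]:
    """Return IDs of subcategories that still lack an owner or any evidence."""
    gaps = []
    for fn in functions:
        for cat in fn.categories:
            for sub in cat.subcategories:
                if sub.owner is None or not sub.evidence:
                    gaps.append(sub.id)
    return gaps


rmf = [
    Function("GOVERN", [
        Category("GOVERN 1", [
            Subcategory("GOVERN 1.1", "Placeholder: applicable AI laws are documented",
                        owner="Legal", evidence=["ai-regulatory-register.xlsx"]),
            Subcategory("GOVERN 1.2", "Placeholder: AI policies are in place", owner="CISO"),
        ]),
    ]),
    Function("MAP", [
        Category("MAP 1", [
            Subcategory("MAP 1.1", "Placeholder: intended use and context are documented"),
        ]),
    ]),
    Function("MEASURE", []),
    Function("MANAGE", []),
]

print(open_items(rmf))  # ['GOVERN 1.2', 'MAP 1.1']
```

In practice you would load the full set of categories and subcategories from NIST’s published materials rather than typing them in; the point here is only that each level narrows the question until it becomes something a named person can answer with evidence.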


For an in-depth dive into the NIST AI RMF, check out our free resource, Navigating the NIST AI Risk Management Framework: The Ultimate Guide to AI Risk.

Aligning AI Risk Management With NIST’s AI RMF

If you’re building an AI risk management program, you’ll need a solid security framework and risk management software that map back to a recognized standard. Our in-depth guide to the NIST AI Risk Management Framework walks through how to structure and operationalize these controls in practice.

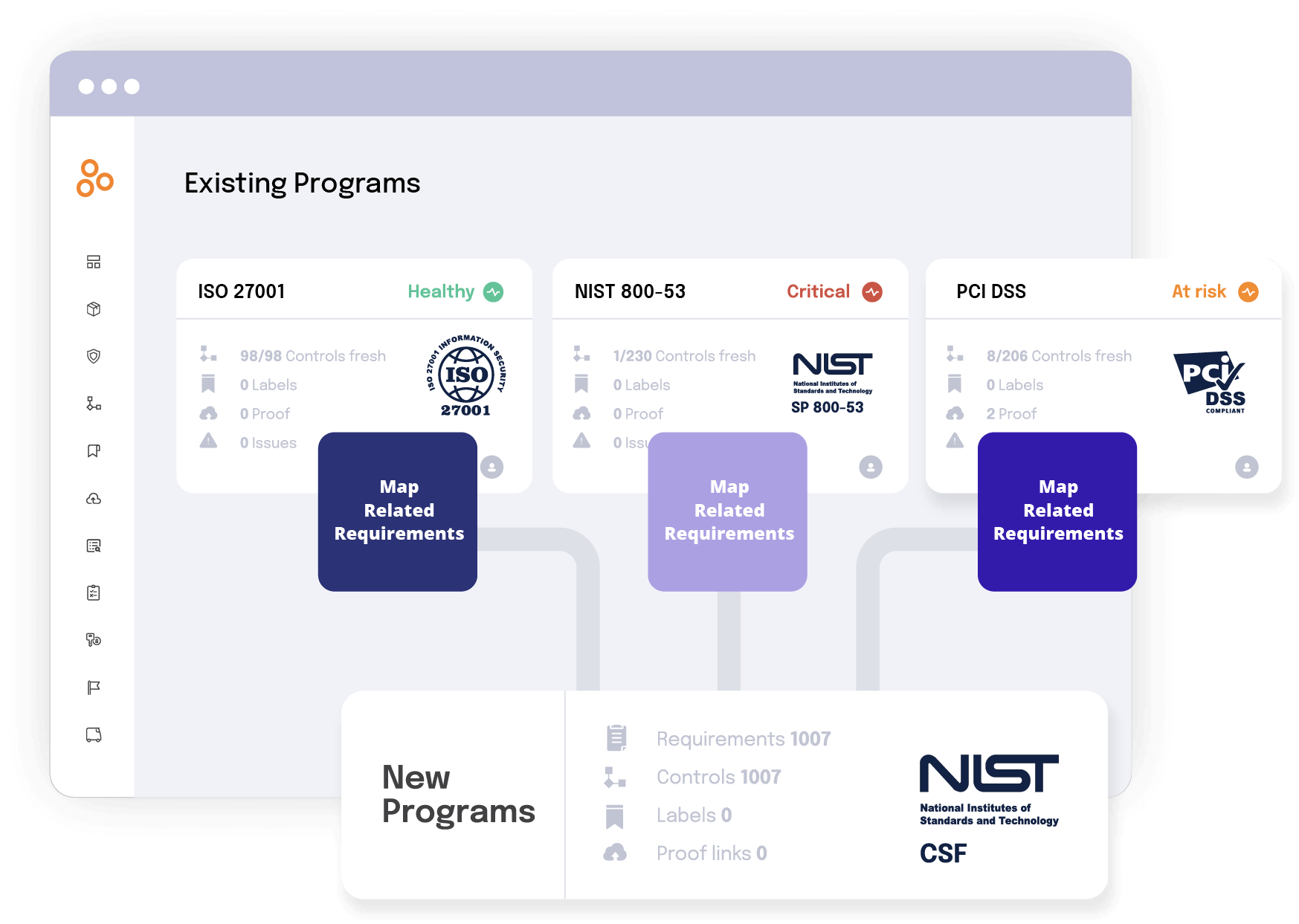

Using the NIST AI RMF in Hyperproof

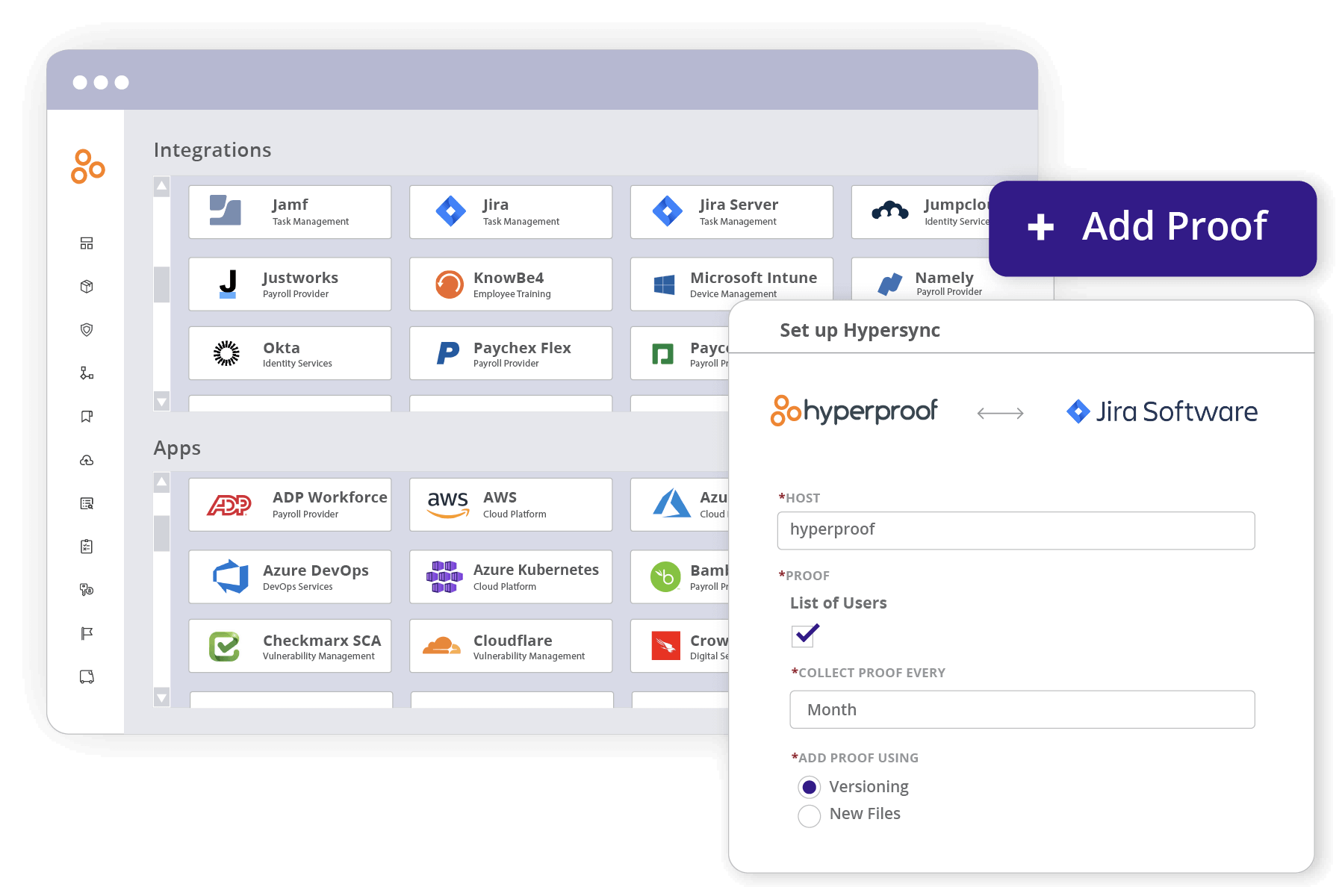

Hyperproof helps you manage day-to-day compliance operations, eliminating tedious, repetitive tasks like manual evidence collection and control assessments. Its risk management platform automates evidence collection and continuously monitors your controls to help protect your organization against AI risk.

NIST Cybersecurity Framework (CSF)

The NIST Cybersecurity Framework (CSF) is a broader framework that organizations can use to guide the development of their cybersecurity programs. The most recent version, CSF 2.0, published in February 2024, has the same basic structure as the AI framework. However, the CSF defines six core functions rather than four (Govern, Identify, Protect, Detect, Respond, and Recover) and focuses on cybersecurity rather than artificial intelligence. Together, the NIST CSF and the NIST AI RMF have become two of the most influential frameworks U.S. enterprises rely on when building secure, compliant AI programs.

Why use a cybersecurity framework to help with your artificial intelligence risks? First, because cyber threats are among the most serious risks to the successful use of AI; the more you can develop (and maintain) a robust cybersecurity posture, the more smoothly your AI projects will proceed. Second, many of the capabilities defined in the CSF overlap with those in the AI framework, so embracing the CSF early will leave you in a better position to adopt the AI framework later.


For an in-depth dive into NIST CSF, check out our free resource, The Ultimate Guide to NIST CSF.

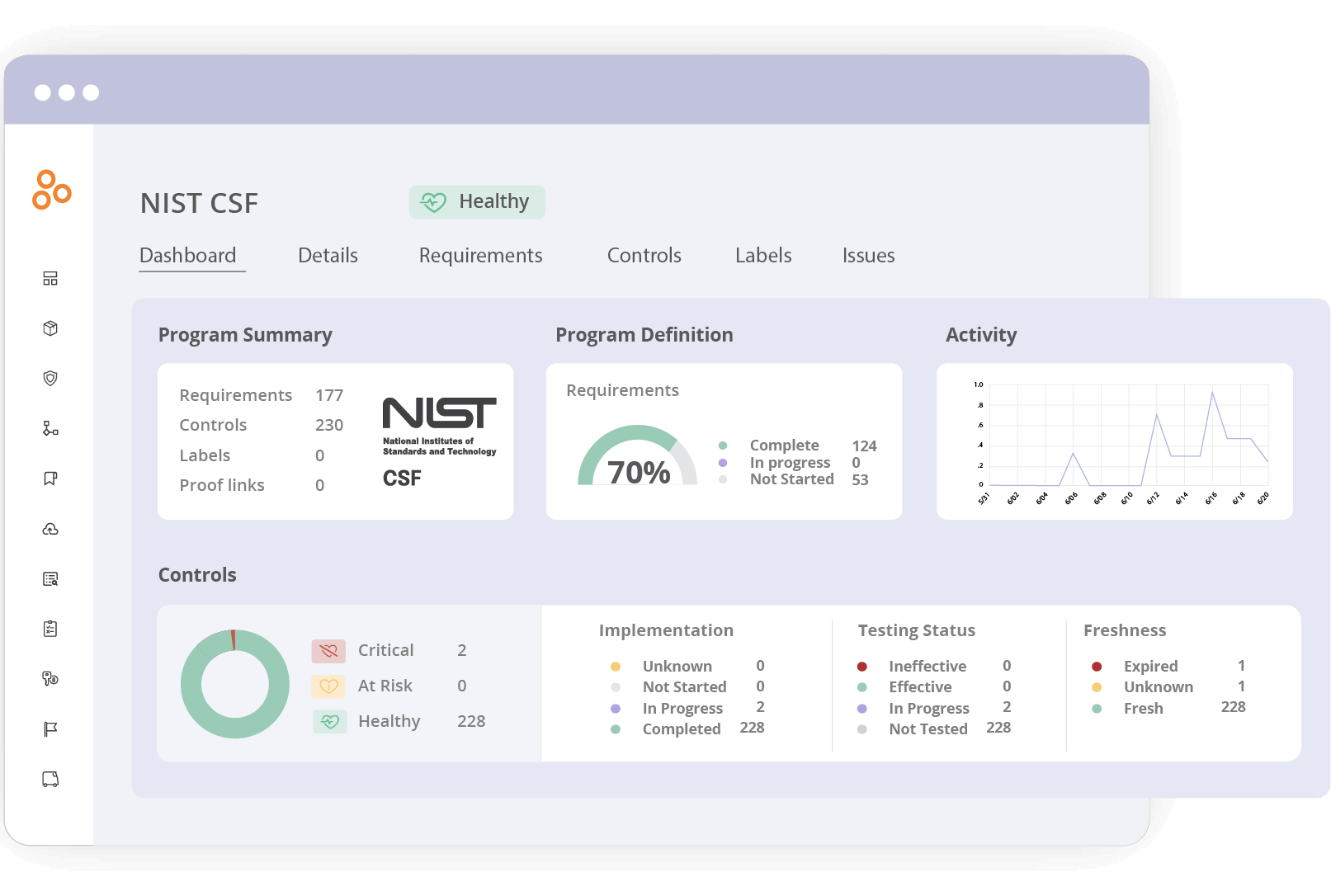

Using NIST CSF in Hyperproof

Hyperproof’s compliance operations platform makes it easy for organizations to see how their current security program and activities stack up against the recommended activities in the NIST CSF and identify areas for improvement. Hyperproof comes with project management features that help security assurance professionals manage security continuously, including assigning control testing tasks to business unit operators, managing remediation projects, and collecting evidence of controls’ effectiveness. Hyperproof also facilitates automated controls monitoring, and our built-in dashboards help organizational leaders understand how their security efforts are helping mitigate risks and satisfy regulatory requirements. These capabilities also complement broader AI security frameworks that emphasize identity management, data protection, incident response, and ongoing monitoring of AI workloads.

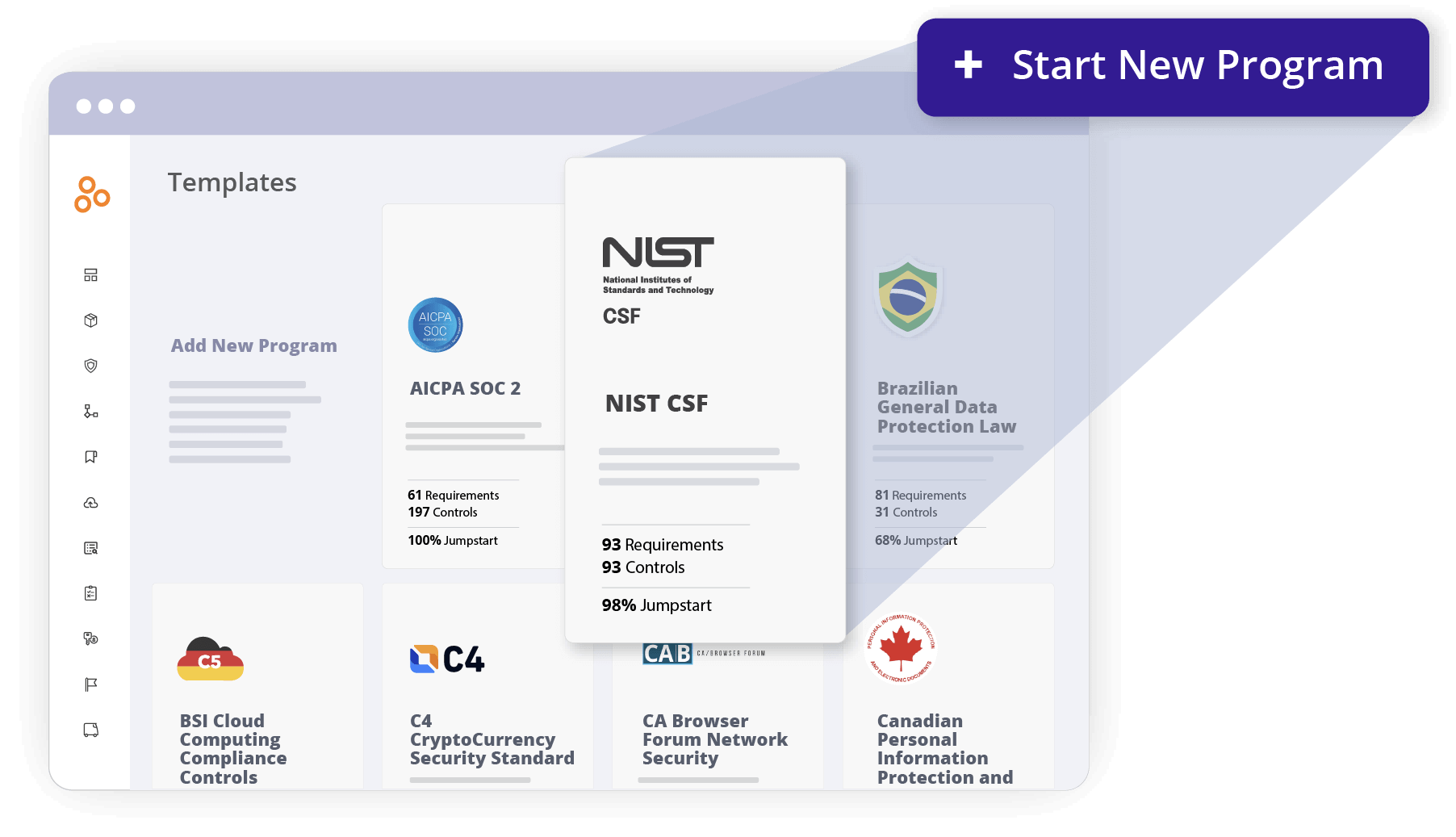

Get an out-of-the-box NIST CSF 2.0 framework template

Leverage Hyperproof’s NIST CSF template, which includes an updated version for CSF 2.0 along with recommended security actions and recommended controls that provide a starting point for meeting your organization’s unique needs.

Quickly collect evidence for NIST CSF

Automate evidence collection and link evidence to requirements and controls with dozens of integrations, so your proof is always up to date.

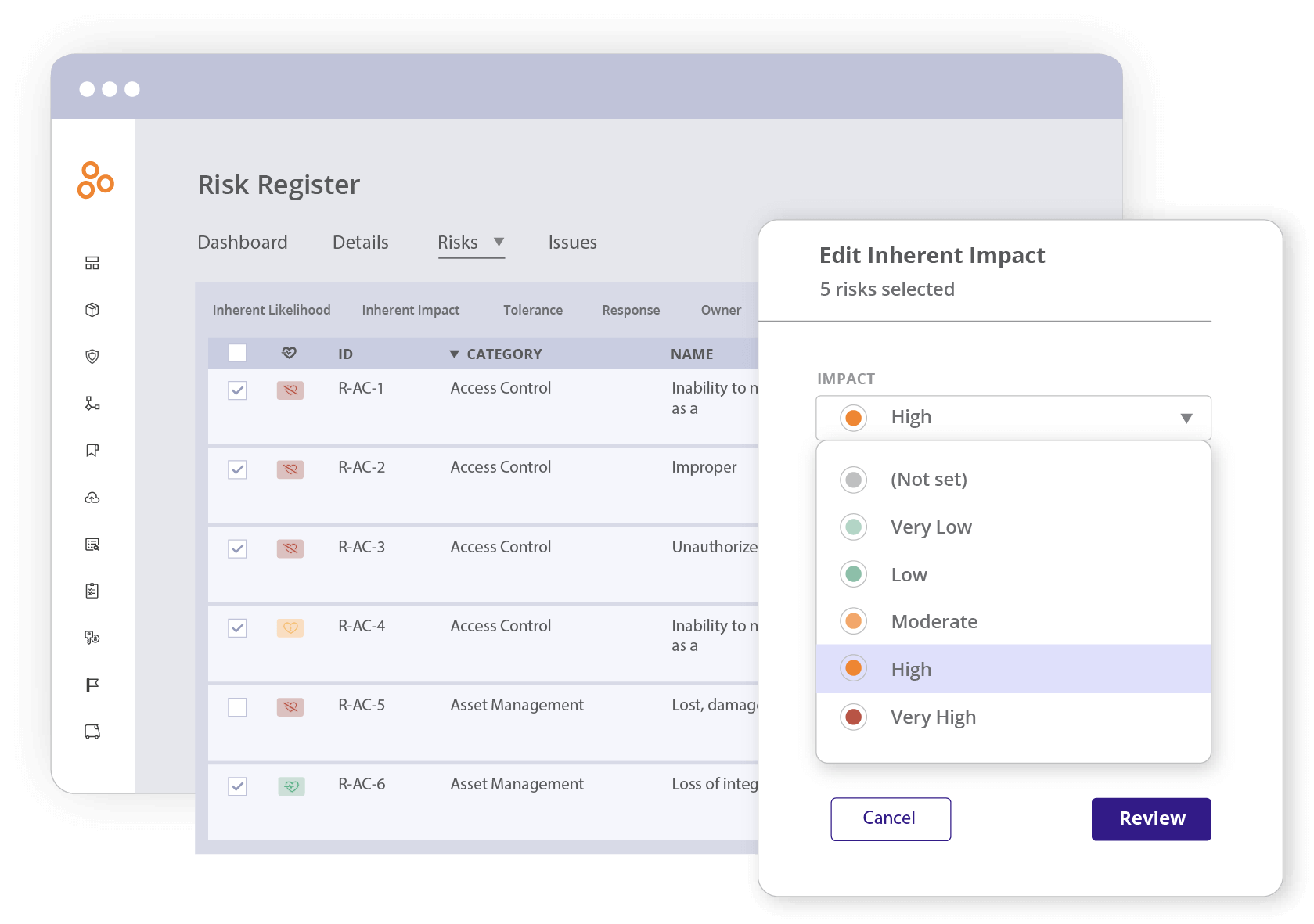

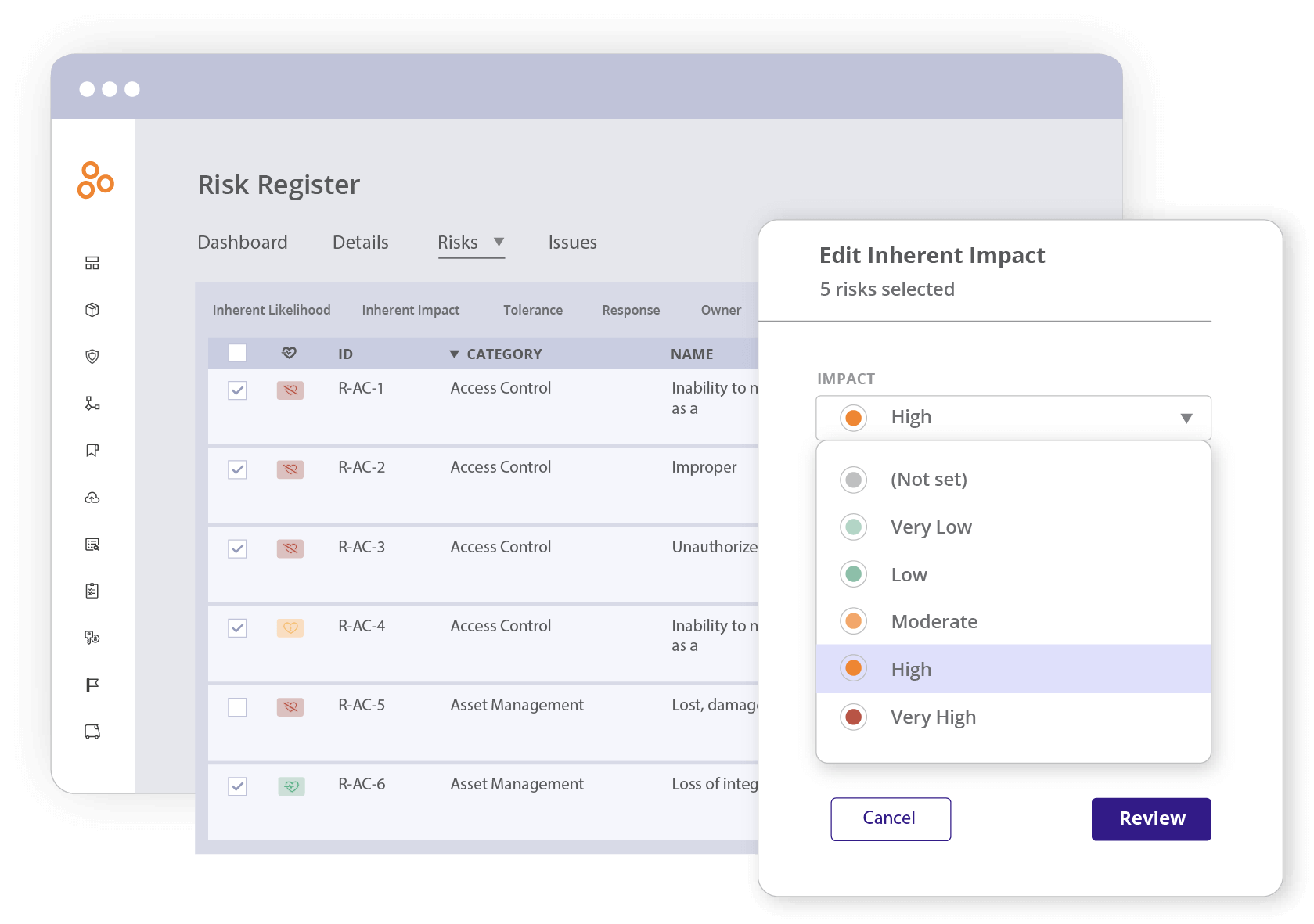

Collect and view your risks in a single place

Hyperproof’s risk register enables risk owners to consistently document the results of risk assessments, enabling leaders to better manage resources and prioritize mitigation activities.
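Consistent documentation matters because it lets you compare risks on the same scale. One common convention, shown here as a minimal sketch rather than Hyperproof’s own scoring method, rates each risk’s likelihood and impact from 1 to 5 and multiplies them into a 1 to 25 score. The `Risk` class, the example risks, and the high/medium/low thresholds are all hypothetical; set the thresholds to match your own risk appetite.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    id: str
    title: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)

    def __post_init__(self) -> None:
        # Reject ratings outside the 1-5 scale so scores stay comparable.
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # Inherent risk score on a 1-25 scale.
        return self.likelihood * self.impact

    @property
    def rating(self) -> str:
        # Hypothetical thresholds; tune them to your organization's risk appetite.
        if self.score >= 15:
            return "high"
        if self.score >= 8:
            return "medium"
        return "low"


def prioritize(register: list[Risk]) -> list[Risk]:
    """Order risks from highest to lowest score for mitigation planning."""
    return sorted(register, key=lambda r: r.score, reverse=True)


register = [
    Risk("R-3", "Model drift degrades fraud-detection accuracy", likelihood=2, impact=3),
    Risk("R-1", "Training data includes personal data without a lawful basis", likelihood=3, impact=5),
    Risk("R-2", "Customer chatbot gives inaccurate policy answers", likelihood=4, impact=3),
]

for risk in prioritize(register):
    print(risk.id, risk.score, risk.rating)
# R-1 15 high
# R-2 12 medium
# R-3 6 low
```

Multiplying the two ratings is a simplification (it treats a rare catastrophe and a frequent nuisance with the same product as equal), so many teams also review the highest-impact risks separately regardless of score.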

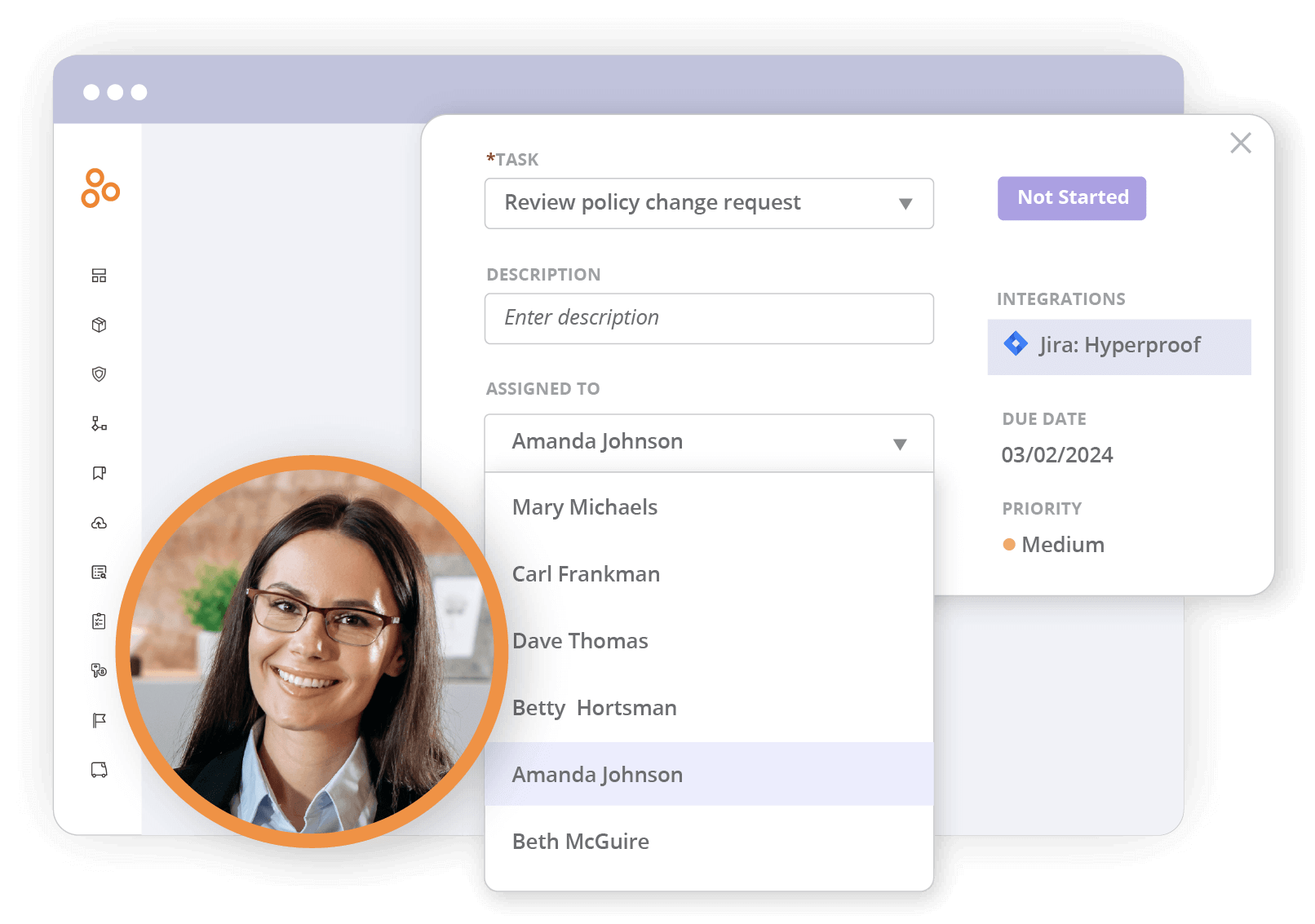

Easily assign tasks to NIST CSF framework participants

Ensure the work gets done by automating task assignments and review workflows within the platform, maximizing your team’s output and minimizing delays.

Understand your compliance posture at a glance

Understand how your team is progressing with dashboards and reporting that can be shared with key stakeholders.

Reuse your NIST CSF work to satisfy other frameworks

Use Hyperproof’s Jumpstart feature to map your existing NIST CSF controls across multiple frameworks like ISO 27001, NIST SP 800-53, and PCI DSS so you can quickly add new frameworks.
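The idea behind reusing controls across frameworks is a many-to-many mapping: one control can satisfy requirements in several frameworks at once. The sketch below illustrates only that general idea and does not describe how Jumpstart works internally. Every control and requirement ID in it is a hypothetical placeholder, not an entry from an official crosswalk; the `coverage` helper is likewise our own illustration.

```python
# All control and requirement IDs below are hypothetical placeholders,
# not entries from an official crosswalk.
CONTROL_MAP: dict[str, dict[str, set[str]]] = {
    "CTL-01 Quarterly access reviews": {
        "NIST CSF": {"CSF-REQ-A"},
        "ISO 27001": {"ISO-REQ-1"},
        "PCI DSS": {"PCI-REQ-7"},
    },
    "CTL-02 Security awareness training": {
        "NIST CSF": {"CSF-REQ-B"},
        "ISO 27001": {"ISO-REQ-2"},
    },
}


def coverage(
    control_map: dict[str, dict[str, set[str]]],
    framework: str,
    required: set[str],
) -> tuple[set[str], set[str]]:
    """Split a framework's requirements into those already satisfied by
    existing controls and those that still need a control."""
    covered: set[str] = set()
    for mappings in control_map.values():
        covered |= mappings.get(framework, set())
    covered &= required  # ignore mappings to requirements not in scope
    return covered, required - covered


iso_required = {"ISO-REQ-1", "ISO-REQ-2", "ISO-REQ-3"}
covered, gaps = coverage(CONTROL_MAP, "ISO 27001", iso_required)
print(sorted(covered))  # ['ISO-REQ-1', 'ISO-REQ-2']
print(sorted(gaps))     # ['ISO-REQ-3']
```

When you add a new framework, only the requirements in the gap set need new work; everything else can point to evidence you already collect.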

ISO 42001

The International Organization for Standardization released ISO 42001: Artificial Intelligence Management Systems in 2023. The framework is longer than its NIST counterpart. It includes four “annexes” that offer specific controls, objectives, and implementation guidance that you might want to follow with your own AI project.

Overall, however, ISO 42001 tries to tackle more fundamental questions about developing and deploying AI. For example, how should you plan your project, including objectives to be set and changes you might need to make? How do you conduct an AI risk assessment? How should you evaluate the performance of the AI system? The standard addresses all these questions and many more, and gives you a blueprint for organizing your AI governance business documents — from policies and risk assessments to system impact reports — around a formal AI management system.

Using ISO 42001 in Hyperproof

Hyperproof makes it easy for you to manage AI risk by providing an out-of-the-box ISO 42001 framework template so you can start managing AI risk quickly and effectively. Once you have ISO 42001 implemented, the Hyperproof platform provides insight into how your ISO 42001 controls map to other similar frameworks like ISO 27001 and NIST CSF. Hyperproof also has project management features to help you continuously monitor, collect evidence, automate control testing, and assign control testing tasks. Managing remediation projects is seamless, and our built-in dashboards help organizational leaders understand how their security efforts are helping mitigate risks and satisfy regulatory requirements.

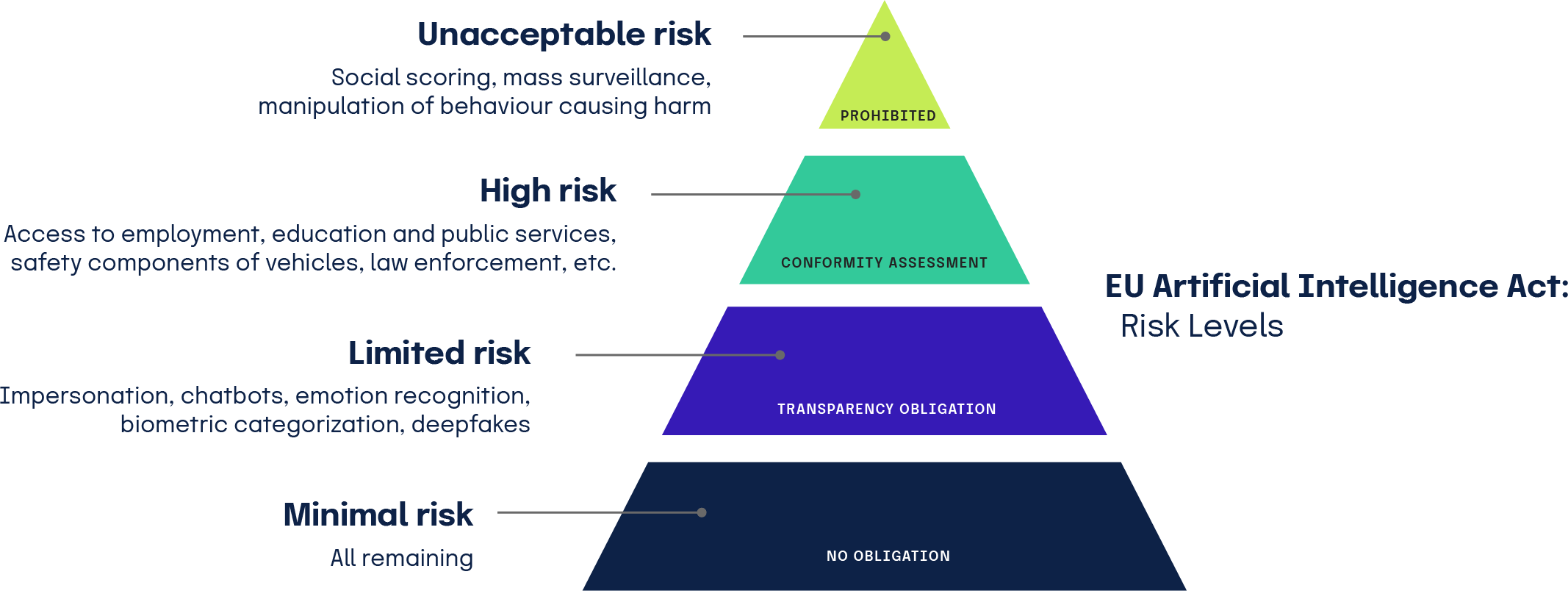

European Union AI Act

The EU AI Act, politically agreed in December 2023 and formally adopted in 2024, is a legal framework rather than a regulatory one. That is, the EU AI Act is a law that defines how organizations are allowed to develop AI and how the field will be regulated, even though many of the supporting regulations haven’t been finished yet. Regardless, the Act itself is still a useful blueprint for AI development.

The law categorizes AI usage by levels of risk. Some uses of AI, such as governments using AI to generate some sort of “good citizen” score, are classified as “unacceptable” and, therefore, illegal. Other applications, such as using AI to run critical infrastructure or medical devices, have the lower classification of “high risk.” This means they are allowed, but businesses developing or using those AI systems must meet rigorous standards for risk assessment, data validation, activity logs, and the like.
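A practical first step is to tag every AI system in your inventory with a risk tier and derive follow-up work from that tag. Besides the “unacceptable” and “high” tiers described above, the Act also recognizes “limited” risk (which carries transparency obligations, such as telling people they are interacting with AI) and “minimal” risk. The sketch below is a simplified illustration under those assumptions: the tier is assigned by your legal and compliance reviewers, not inferred by code, the example systems are hypothetical, and `HIGH_RISK_ACTIONS` lists only the obligations this guide mentions, not the Act’s full requirements.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"  # prohibited practices, e.g. social scoring
    HIGH = "high"                  # allowed, subject to strict obligations
    LIMITED = "limited"            # allowed, subject to transparency obligations
    MINIMAL = "minimal"            # allowed, no specific obligations


# Partial list drawn from the obligations this guide mentions; not the Act's full text.
HIGH_RISK_ACTIONS = ["risk assessment", "data validation", "activity logging"]


@dataclass
class AISystem:
    name: str
    tier: RiskTier  # assigned by legal/compliance review, not inferred by code


def action_plan(inventory: list[AISystem]) -> dict[str, list[str]]:
    """Map each inventoried AI system to the follow-up work its tier implies."""
    plan: dict[str, list[str]] = {}
    for system in inventory:
        if system.tier is RiskTier.UNACCEPTABLE:
            plan[system.name] = ["stop using or decommission"]
        elif system.tier is RiskTier.HIGH:
            plan[system.name] = list(HIGH_RISK_ACTIONS)
        elif system.tier is RiskTier.LIMITED:
            plan[system.name] = ["disclose to users that they are interacting with AI"]
        else:
            plan[system.name] = []
    return plan


# Hypothetical inventory entries for illustration.
inventory = [
    AISystem("Triage model in a medical device", RiskTier.HIGH),
    AISystem("Customer service chatbot", RiskTier.LIMITED),
    AISystem("Email spam filter", RiskTier.MINIMAL),
]

for name, actions in action_plan(inventory).items():
    print(f"{name}: {actions or 'no tier-specific actions'}")
```

Keeping the tier as an explicit, reviewed field (rather than a keyword match on the system’s description) leaves an auditable record of who classified each system and why.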

Japan’s AI Guidelines for Business

In April 2024, Japanese regulators released a set of voluntary guidelines for how businesses should develop and use AI. While relatively short, the guidelines still hit on many of the same themes cited by U.S. and European regulators: eliminating the threat of bias in AI processing, the need for strong security and privacy protections, transparent disclosure of when people are interacting with an AI system, and so forth.

NIST also published “crosswalk” guidance in April 2024 to show where its AI risk management framework does and does not overlap with Japan’s guidelines.

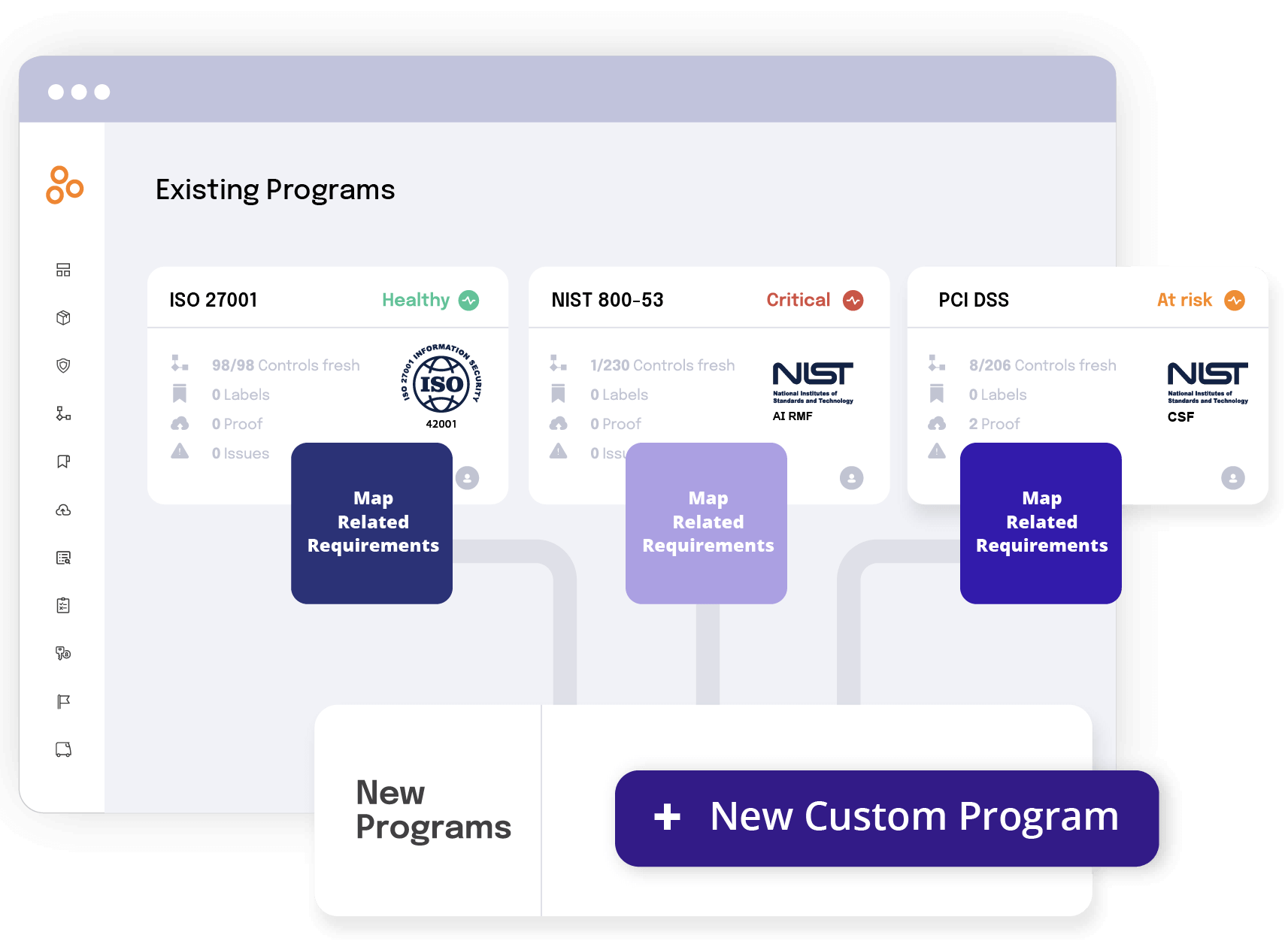

Custom frameworks in Hyperproof help you manage AI risk

While Hyperproof does not have an out-of-the-box framework template for the EU AI Act or Japan’s AI Guidelines for Business, they are both available as custom frameworks. Bringing a framework into Hyperproof is as simple as uploading a .CSV file. Once the custom program is in Hyperproof, you can modify any of the program requirements and/or controls and access native Hyperproof features to run your program.
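If you maintain a framework’s requirements in a spreadsheet or script, you can generate the CSV programmatically. The sketch below uses Python’s standard `csv` module; the column names and requirement IDs are hypothetical, so match them to the headers in Hyperproof’s actual import template. The descriptions paraphrase the themes of Japan’s guidelines summarized earlier in this guide, not the guidelines’ official text.

```python
import csv
import io

# Hypothetical column layout; map these to the headers in your GRC tool's import template.
FIELDS = ["requirement_id", "title", "description", "source"]

# Hypothetical IDs; the descriptions paraphrase themes summarized in this guide.
requirements = [
    {"requirement_id": "JP-01", "title": "Fairness",
     "description": "Identify and reduce bias in AI processing.",
     "source": "Japan AI Guidelines for Business"},
    {"requirement_id": "JP-02", "title": "Security and privacy",
     "description": "Apply strong security and privacy protections to AI systems.",
     "source": "Japan AI Guidelines for Business"},
    {"requirement_id": "JP-03", "title": "Transparency",
     "description": "Disclose when people are interacting with an AI system.",
     "source": "Japan AI Guidelines for Business"},
]


def to_csv(rows: list[dict[str, str]]) -> str:
    """Serialize requirement rows to CSV text, rejecting rows with a blank ID."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    for row in rows:
        if not row.get("requirement_id"):
            raise ValueError(f"row is missing a requirement_id: {row}")
        writer.writerow(row)  # raises ValueError if a row has keys not in FIELDS
    return buf.getvalue()


csv_text = to_csv(requirements)
print(csv_text)

# To save it, open the file with newline="" so the csv module's line endings are kept:
# with open("custom_framework.csv", "w", newline="", encoding="utf-8") as f:
#     f.write(csv_text)
```

Generating the file from a single source list makes it easy to re-import an updated version when a regulator revises its guidelines.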

Choosing the right framework for your organization

In Hyperproof’s 2024 IT Risk and Compliance Benchmark Report, 32% of respondents said they expect to implement an additional framework to help them mitigate the business risks associated with artificial intelligence (and 40% said they plan to modify controls in an existing framework). So, what are the best ways to choose the right framework for your business? Many organizations experiment with several AI risk assessment frameworks before settling on the mix that best fits their industry, risk appetite, and regulatory environment.

This is where a GRC platform can help compliance officers enormously. When you evaluate compliance platforms for enterprise-scale risk workflows, pay close attention to how they support mapping frameworks, assigning owners, and orchestrating remediation across teams.

The right platform, with the right capabilities, can help in the following ways:

In the end, the platform should also provide a complete trail of the work, so you can provide that documentation to auditors, regulators, the board, or anyone else who needs to see it.

What well-governed AI should look like

Regardless of the specific framework you might use to manage your adoption of artificial intelligence, the result should be the same. You should end up with a compliance program that is both robust and responsive.

Robust

Your compliance program is strong and resistant to attempts to circumvent it — and rest assured, people will attempt to circumvent your policies, procedures, and controls to manage AI risks. A robust program will work day in and day out to keep your AI systems operating in a reliable, compliant, risk-aware manner.

Responsive

Your program can accommodate new risks and regulations as they emerge, and emerge they will. A responsive compliance program uses frameworks as guideposts to help you implement new policies, procedures, and controls (or amend existing ones) as your business, and its attendant risk profile, evolves.

In the final analysis, the right AI framework will help you reduce risks to an acceptable level and manage the residual risks of AI, which, let’s face it, will never go away. You’ll also have the clarity and assurance you need to report to the board and other stakeholders as we continue to push into our new AI world.
