The Dual Edges of AI in Cybersecurity: Insights from the 2024 Benchmark Survey Report

Artificial intelligence (AI) in cybersecurity presents a complex picture of risks and rewards. According to Hyperproof’s 5th annual benchmark report, AI technologies are at the forefront of both enabling sophisticated cyberattacks and bolstering defenses against them. This duality underscores the critical need for nuanced application and vigilant management of AI in cybersecurity risk management practices.

This blog offers a high-level overview of our findings from chapter 2 of the report, centered on AI. Download the full report for a more detailed exploration and to access additional chapters.

AI as a catalyst for cybersecurity and business risk

AI is a double-edged sword in cybersecurity. It can serve as a spear that helps threat actors attack companies more effectively, and it can help defend those same organizations, which is why the topic remains polarizing and nuanced.

AI’s dual capability presents a unique challenge – and opportunity – for Governance, Risk, and Compliance (GRC) professionals. On the one hand, AI technology can streamline workflows, enhance detection mechanisms, and provide predictive insights that can preempt cyber attacks. On the other hand, introducing AI into business operations can also bring about new vulnerabilities.

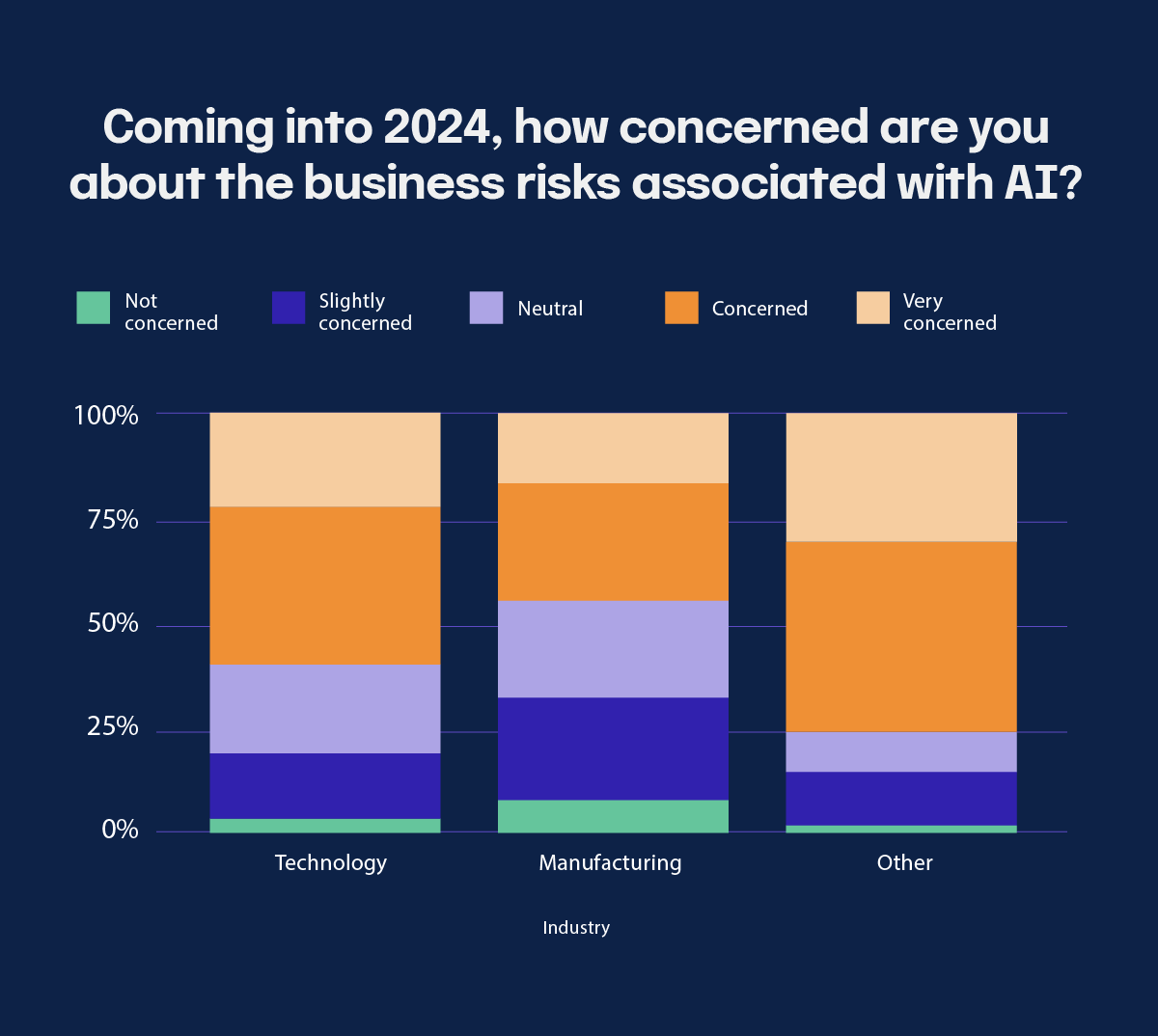

Examples of these new vulnerabilities include more advanced phishing schemes and AI-driven password-guessing techniques, which organizations worldwide now face. According to our 2024 IT Risk and Compliance Benchmark Report, 39% of survey respondents are wary of the business risks posed by generative AI technologies, with 22% expressing extreme concern.

This apprehension is counterbalanced by optimism: 61% of respondents leverage AI to streamline control recommendations, and 59% use it to assist in reviewing documentation. Implementing AI strategically can augment the efficiency of security measures, but it also necessitates a new level of vigilance and adaptive security strategies to mitigate the risks these advanced technologies introduce.

Global regulatory response and the necessity of AI frameworks

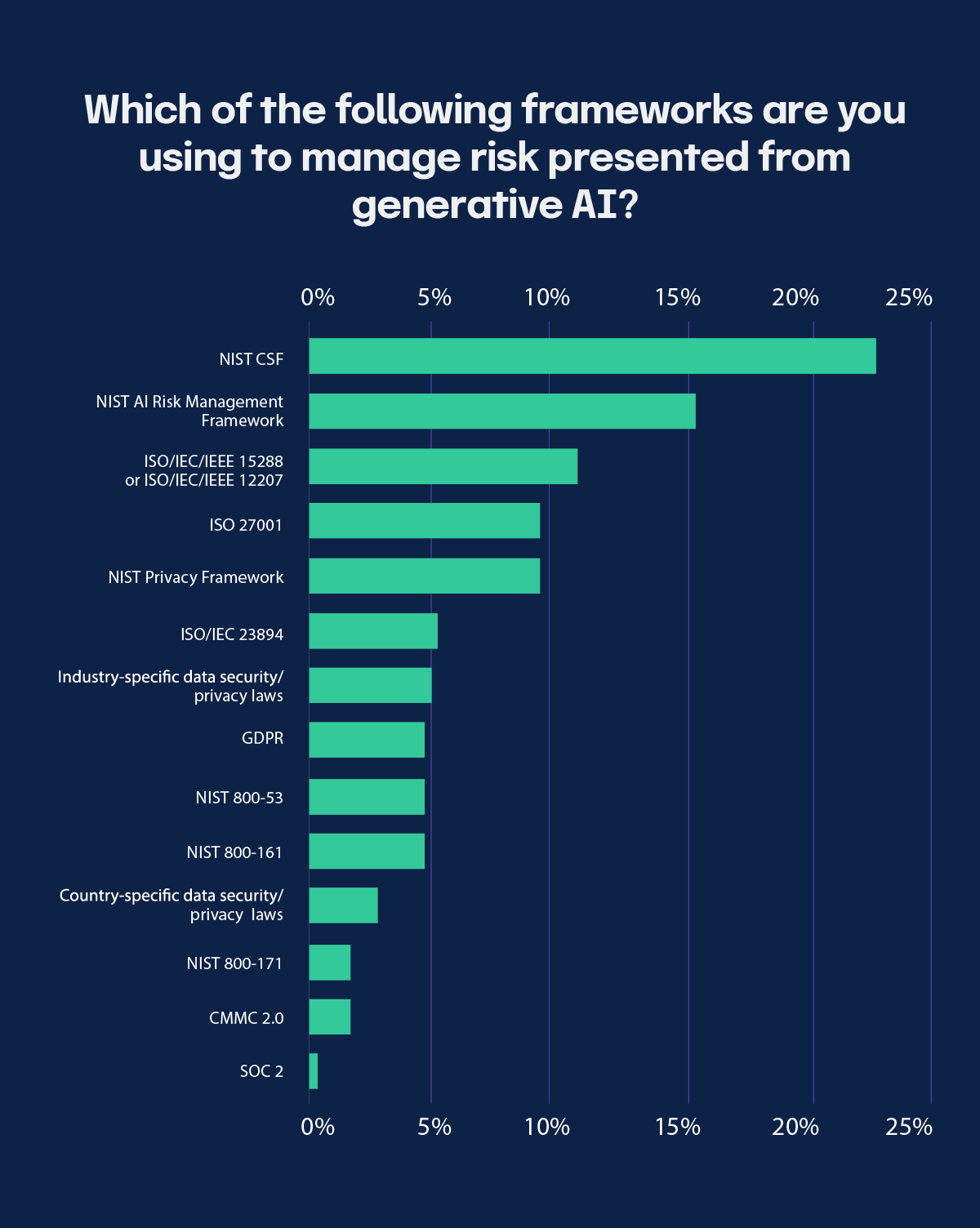

The global regulatory environment is quickly adapting to the challenges and opportunities presented by AI in cybersecurity. As AI technologies evolve, so does the need for robust regulatory frameworks that can address the complex cybersecurity, privacy, and ethical implications of AI. Among our survey respondents, 23% use the NIST Cybersecurity Framework (CSF) to manage AI risk, making it the most commonly used framework for that purpose.

In response to the burgeoning AI challenges, the NIST AI Risk Management Framework (RMF), released in early 2023, has already become the second most commonly used framework for AI.

16% of those surveyed use NIST AI RMF to tackle generative AI risk. This framework provides structured guidance on managing risks posed by AI technologies to individuals, organizations, and society. Other top frameworks used in response to the risk presented by generative AI include ISO 15288 or ISO 12207 (12%), ISO 27001 (9%), and the NIST Privacy Framework (9%).

As regulatory bodies worldwide continue to refine and introduce new guidelines, organizations must remain agile, ensuring their AI deployments are transparent, accountable, and aligned with existing and emerging regulations to foster trust and ensure compliance.

Industry-specific AI concerns and practices

AI risk concerns vary significantly across sectors, particularly in highly regulated industries like aviation, banking, FinTech, and health tech. These sectors report higher levels of concern due to the direct impact AI can have on critical operational aspects and the strict regulatory environments in which they operate.

For instance, in banking and FinTech, AI poses both an opportunity to enhance customer experience and a risk in terms of financial security and data privacy. The 2024 IT Risk and Compliance Benchmark Report reveals that 44% of respondents in the banking and FinTech industries are “concerned,” and 31% are “very concerned” about AI risks.

These numbers reflect the complex balance organizations must maintain between leveraging AI for competitive advantage and managing potential threats to avoid costly disruptions and ensure regulatory compliance.

Strategic responses to AI challenges

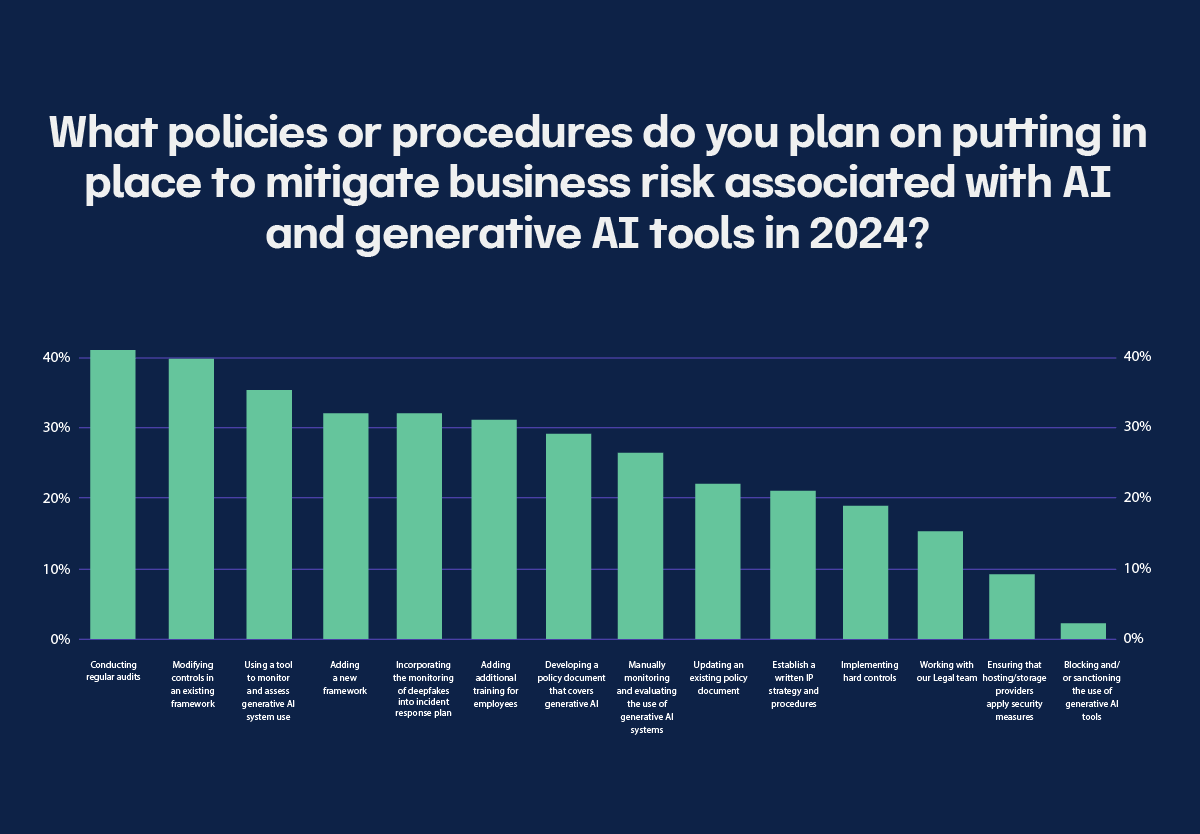

In light of the evolving AI landscape, organizations are re-evaluating their cybersecurity strategies to incorporate AI capabilities while addressing the associated risks. Many organizations are now prioritizing regular internal audits and revising their existing control frameworks to better align with the AI-driven threat landscape.

An impressive 80% of survey respondents view their AI strategy as a crucial component of their operational planning, highlighting the growing recognition of AI’s role in enhancing cybersecurity posture. These strategic adaptations are not merely reactive measures but are part of a broader, proactive approach to cybersecurity that integrates AI as a core element of the defense strategy. This enables organizations to not only defend against but also anticipate potential cyber threats more effectively.

41% of respondents plan to conduct regular audits to mitigate business risk associated with AI tools in 2024. Additionally, 40% plan to modify controls in an existing framework to accommodate AI into their risk management program.

Another 35% plan to use a tool to monitor and assess generative AI system use, while 32% plan to add another framework. This last point is particularly interesting, since 32% of respondents also reported postponing the addition of frameworks because of time spent on other manual day-to-day tasks.

At the other end of the spectrum, only 3% plan to block or sanction the use of generative AI tools within their organizations. This demonstrates that accepting the usage of AI is likely a business imperative for many, as it gives companies an opportunity to further streamline their operations, allowing for a more efficient workforce.

Optimizing GRC with AI amid economic shifts

The current economic climate has imposed constraints that prompt organizations to seek more efficient ways to manage GRC. AI is increasingly viewed as a critical tool, offering the potential to automate routine tasks, streamline data analysis, and optimize regulatory compliance processes. This shift is particularly relevant as organizations face pressure to do more with less, making AI an attractive option for enhancing GRC operations.

According to our survey results, automation and AI-driven analytics are playing pivotal roles in transforming GRC workflows, allowing GRC professionals to focus on strategic risk management and compliance activities that require more nuanced human judgment.

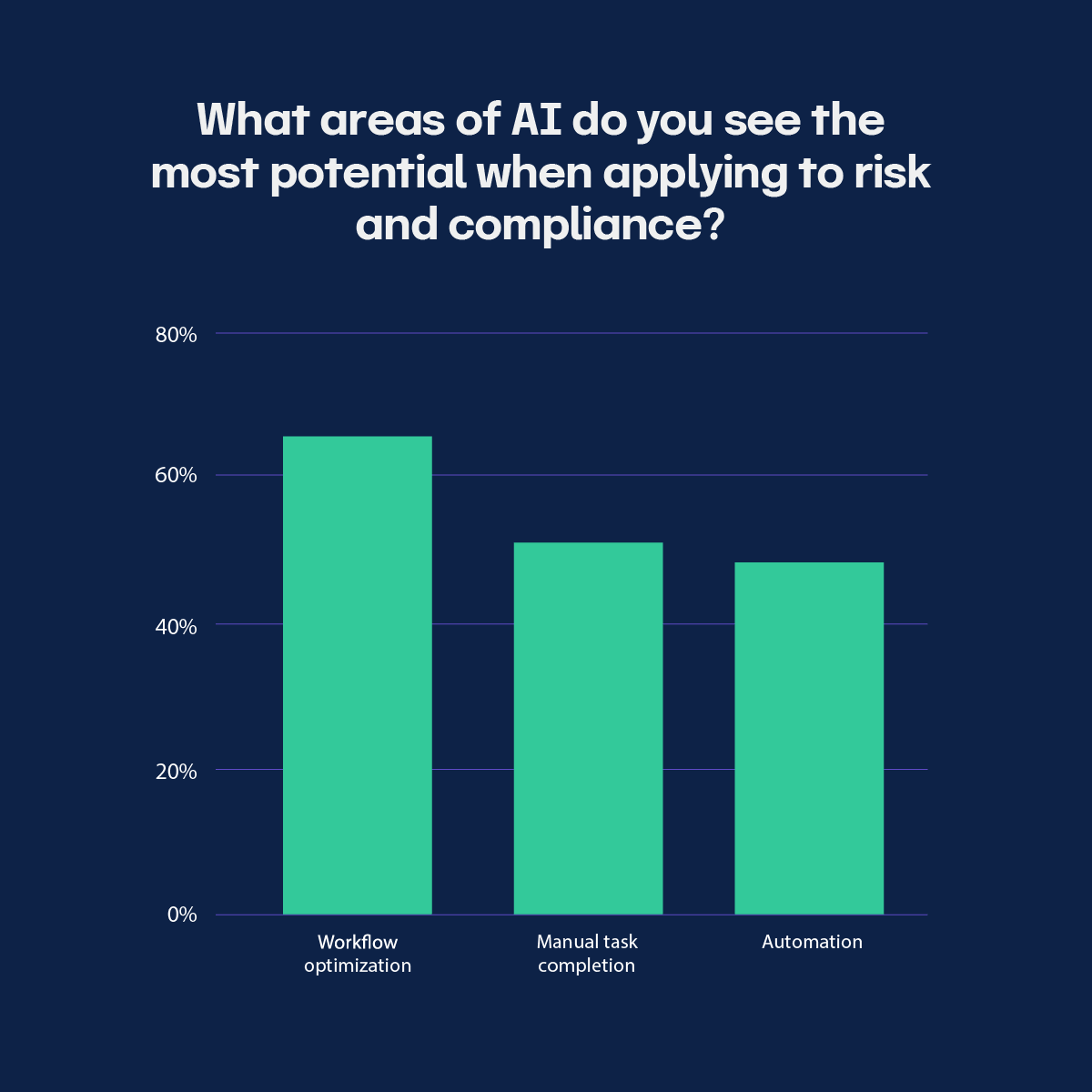

Looking forward, we asked the security and compliance practitioners surveyed where they see the most potential for AI in risk and compliance. 65% foresee AI helping with workflow optimization, while 52% say AI will aid in manual task completion. Lastly, 49% expect AI to help the most with automation.

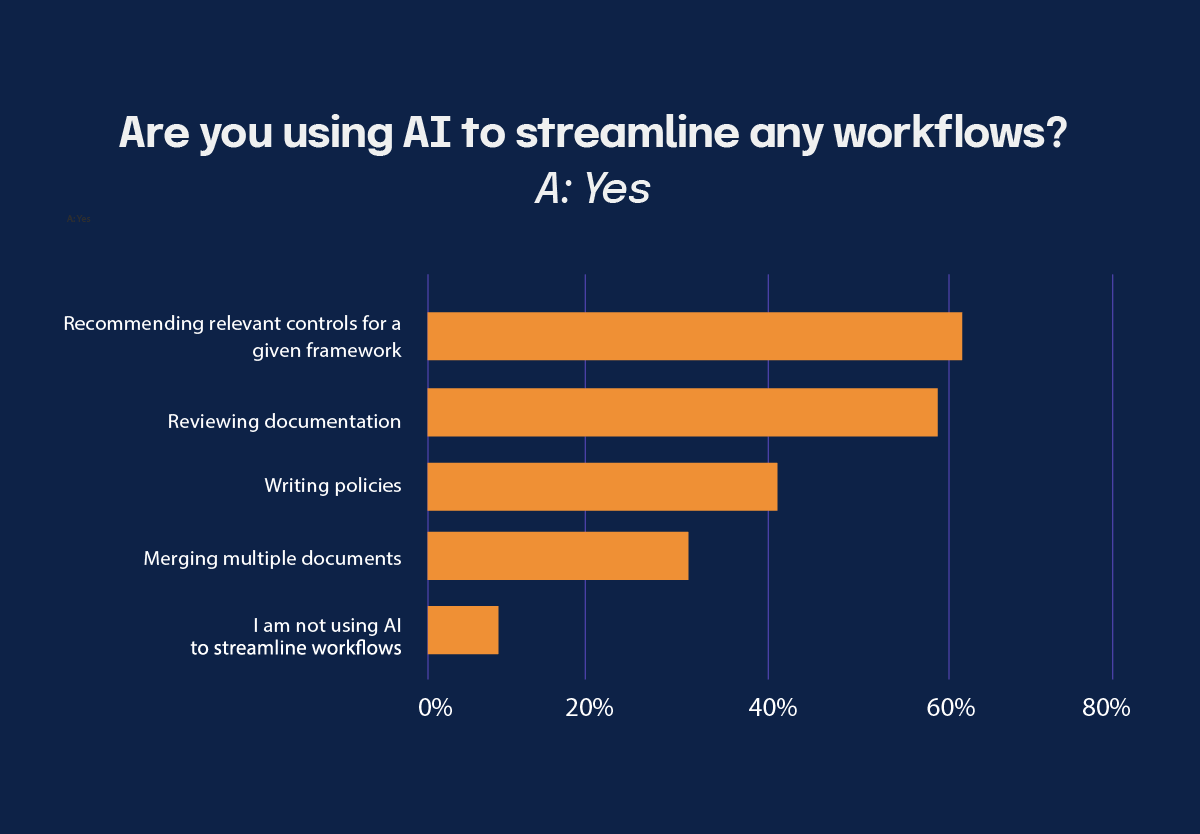

The ways respondents use AI do not stop there. We asked whether they were using AI to streamline any workflows, and the majority said yes. 61% use AI to recommend relevant controls for a given framework, while 59% said they use it to review documentation. Another 41% use AI to write policies, and 30% have it help merge multiple documents.

A mere 7% said they are not using AI to streamline their workflows.

Embracing AI with caution and curiosity

The findings from our annual benchmark report show a clear narrative: AI’s role in cybersecurity is complex, with both promising opportunities and formidable challenges. As AI continues to reshape the world of cybersecurity, organizations must adopt a cautious, yet curious approach: embrace the benefits of AI to enhance security and operational capabilities and remain aware of (and prepared for) the risks it introduces.

This balanced approach will require ongoing education, vigilant risk management, and a strategic commitment to aligning AI applications with both business objectives and regulatory requirements. Ultimately, by navigating this complex landscape with informed strategies and robust frameworks, organizations can harness AI’s potential to secure their operations against an increasingly dynamic threat environment, ensuring resilience and compliance in an AI-integrated future.

To learn more about the IT risk and compliance landscape in 2024, download the full IT Risk and Compliance Benchmark Report today.
